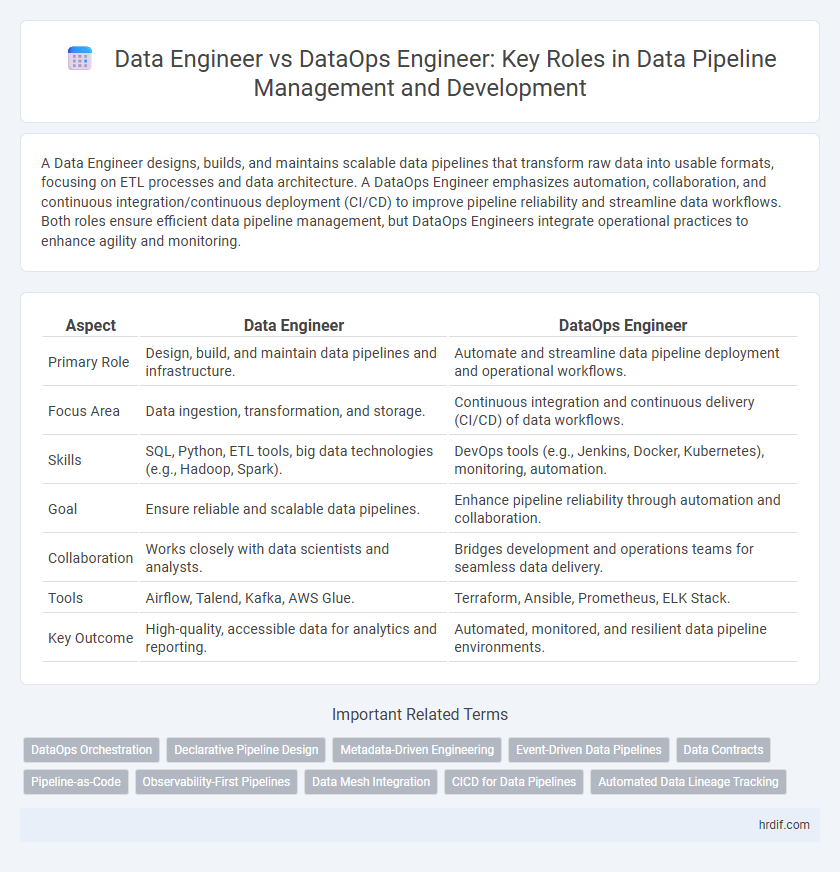

A Data Engineer designs, builds, and maintains scalable data pipelines that transform raw data into usable formats, focusing on ETL processes and data architecture. A DataOps Engineer emphasizes automation, collaboration, and continuous integration/continuous deployment (CI/CD) to improve pipeline reliability and streamline data workflows. Both roles ensure efficient data pipeline management, but DataOps Engineers integrate operational practices to enhance agility and monitoring.

Table of Comparison

| Aspect | Data Engineer | DataOps Engineer |

|---|---|---|

| Primary Role | Design, build, and maintain data pipelines and infrastructure. | Automate and streamline data pipeline deployment and operational workflows. |

| Focus Area | Data ingestion, transformation, and storage. | Continuous integration and continuous delivery (CI/CD) of data workflows. |

| Skills | SQL, Python, ETL tools, big data technologies (e.g., Hadoop, Spark). | DevOps tools (e.g., Jenkins, Docker, Kubernetes), monitoring, automation. |

| Goal | Ensure reliable and scalable data pipelines. | Enhance pipeline reliability through automation and collaboration. |

| Collaboration | Works closely with data scientists and analysts. | Bridges development and operations teams for seamless data delivery. |

| Tools | Airflow, Talend, Kafka, AWS Glue. | Terraform, Ansible, Prometheus, ELK Stack. |

| Key Outcome | High-quality, accessible data for analytics and reporting. | Automated, monitored, and resilient data pipeline environments. |

Defining the Roles: Data Engineer vs DataOps Engineer

Data Engineers design, build, and maintain scalable data pipelines, ensuring efficient data extraction, transformation, and loading (ETL) processes essential for analytics and reporting. DataOps Engineers integrate development and operations practices, emphasizing automation, monitoring, and continuous integration/continuous deployment (CI/CD) to improve pipeline reliability and agility. While Data Engineers focus on pipeline architecture and data quality, DataOps Engineers optimize workflow collaboration and operational stability within data management environments.

Core Responsibilities in Data Pipeline Management

Data Engineers specialize in designing, building, and maintaining scalable data pipelines, focusing on data ingestion, transformation, and storage to ensure high-quality, reliable datasets. DataOps Engineers emphasize automation, monitoring, and orchestration of data workflows, integrating continuous integration and continuous deployment (CI/CD) practices to enhance pipeline efficiency and collaboration. Both roles prioritize data pipeline reliability but diverge in scope, with Data Engineers concentrating on infrastructure and DataOps Engineers on process optimization and operational excellence.

Skill Sets: Overlapping and Distinct Capabilities

Data Engineers specialize in building and optimizing scalable data pipelines using technologies like Apache Spark, Hadoop, and SQL, focusing on data ingestion, transformation, and storage. DataOps Engineers combine these skills with automation, continuous integration/continuous deployment (CI/CD) practices, and monitoring tools such as Jenkins, Terraform, and Kubernetes to ensure reliable and reproducible data workflows. Both roles require proficiency in programming languages like Python and expertise in cloud platforms, yet DataOps Engineers emphasize collaboration, process optimization, and operational stability within the data lifecycle.

Toolchains and Technologies Compared

Data Engineers primarily utilize ETL tools like Apache NiFi, Airflow, and Spark for building and managing data pipelines, focusing on data ingestion and transformation. DataOps Engineers integrate these tools with CI/CD platforms such as Jenkins, GitLab CI, and Docker to automate deployment, monitoring, and collaboration across the data lifecycle. Kubernetes and Terraform often support infrastructure orchestration in DataOps, enhancing pipeline scalability and reliability beyond traditional data engineering toolchains.

Workflow Differences in Data Pipeline Operation

Data Engineers primarily focus on building and maintaining data pipelines, emphasizing ETL (Extract, Transform, Load) processes, data modeling, and integration to ensure reliable data flow from sources to storage. DataOps Engineers concentrate on automation, monitoring, and collaborative workflow optimization, implementing continuous integration and continuous deployment (CI/CD) practices to enhance pipeline efficiency and reduce errors. Workflow differences highlight that Data Engineers create and optimize pipelines, while DataOps Engineers maintain operational stability and scalability through rigorous process automation and real-time analytics.

Automation and CI/CD in Data Engineering vs DataOps

Data Engineers focus on building and optimizing scalable data pipelines using automation tools to ensure efficient data ingestion, transformation, and storage processes. DataOps Engineers emphasize integrating continuous integration and continuous deployment (CI/CD) practices specifically tailored for data workflows, enabling faster, reliable, and collaborative data pipeline delivery. Automation in Data Engineering primarily targets data processing optimization, whereas DataOps leverages CI/CD to streamline pipeline testing, monitoring, and rapid iteration for overall data lifecycle management.

Impact on Data Reliability and Quality

Data Engineers design and build data pipelines focused on efficient data ingestion, transformation, and storage, directly influencing pipeline robustness and data accuracy. DataOps Engineers implement automated testing, monitoring, and continuous integration practices that enhance data reliability and ensure consistent data quality throughout the pipeline lifecycle. Together, these roles optimize data pipeline management by combining infrastructure development with operational excellence to reduce errors and improve trustworthiness of data outputs.

Collaboration with Other Data Teams

Data Engineers primarily focus on building and maintaining reliable data pipelines, ensuring data quality and availability for analytics and machine learning teams. DataOps Engineers emphasize automation, monitoring, and continuous integration to streamline collaboration between data engineering, data science, and operations teams. This collaborative approach reduces deployment time, minimizes errors, and enhances overall data pipeline efficiency.

Career Pathways: Growth, Demand, and Progression

Data Engineers specialize in building and maintaining scalable data pipelines, focusing on data architecture, ETL processes, and optimizing data storage solutions. DataOps Engineers integrate DevOps principles into data workflows, emphasizing automation, monitoring, and continuous integration/continuous deployment (CI/CD) to enhance pipeline reliability and collaboration. Career progression for Data Engineers often leads to roles in data architecture or solutions engineering, while DataOps Engineers advance towards leadership positions in data infrastructure or platform engineering, reflecting rising industry demand for streamlined, agile data operations.

Choosing the Right Role: Which Career Suits You?

Data Engineers specialize in designing, building, and maintaining scalable data pipelines with a strong focus on data architecture, ETL processes, and database management. DataOps Engineers emphasize automation, continuous integration, and collaboration between data teams to ensure efficient deployment and monitoring of data workflows. Choosing the right career depends on your preference for hands-on data infrastructure development versus orchestrating end-to-end data operations and process optimization.

Related Important Terms

DataOps Orchestration

DataOps Engineers specialize in orchestration by automating, monitoring, and optimizing data pipeline workflows to ensure seamless integration and continuous delivery, whereas Data Engineers focus primarily on building and maintaining scalable data pipelines. Through tools like Apache Airflow and Kubernetes, DataOps Engineers enhance collaboration between data teams, reduce pipeline failures, and accelerate deployment cycles, driving data reliability and operational efficiency.

Declarative Pipeline Design

DataOps Engineers prioritize declarative pipeline design to enable automated, scalable, and reproducible data workflows, leveraging infrastructure-as-code principles for seamless pipeline orchestration. In contrast, Data Engineers often focus on imperative scripting and manual configuration, which can lead to less flexible and maintainable data pipeline management.

Metadata-Driven Engineering

Data Engineers design and build scalable data pipelines focusing on data ingestion, transformation, and storage, while DataOps Engineers emphasize Metadata-Driven Engineering to automate pipeline deployment, monitoring, and versioning for enhanced data quality and operational efficiency. Metadata-driven frameworks enable DataOps to implement continuous integration and continuous delivery (CI/CD) in data pipelines, reducing manual intervention and accelerating iterative development cycles in complex data environments.

Event-Driven Data Pipelines

Data Engineers focus on designing and building scalable event-driven data pipelines using technologies like Apache Kafka and Apache Flink to ensure efficient data ingestion and processing in real-time. DataOps Engineers specialize in automating deployment, monitoring, and quality assurance of event-driven data pipelines, integrating CI/CD practices and orchestration tools such as Kubernetes to enhance pipeline reliability and speed.

Data Contracts

Data Engineers design and build scalable data pipelines, emphasizing ETL processes and data quality, while DataOps Engineers implement automated workflows and monitor pipeline health to ensure continuous integration and delivery. Data Contracts play a critical role by defining explicit agreements on data schemas and quality metrics, enabling collaboration between both roles to maintain reliable and consistent data flows.

Pipeline-as-Code

Data Engineers specialize in designing and building scalable data pipelines using Pipeline-as-Code frameworks to automate data ingestion and transformation processes. DataOps Engineers focus on integrating continuous integration and continuous deployment (CI/CD) practices within Pipeline-as-Code to enhance collaboration, monitoring, and automated testing for robust data pipeline management.

Observability-First Pipelines

Data Engineers design and build scalable data pipelines to ensure efficient data flow, whereas DataOps Engineers prioritize observability-first pipelines by integrating real-time monitoring, automated alerting, and continuous feedback loops to enhance pipeline reliability and performance. Emphasizing observability enables rapid issue detection and resolution, reducing downtime and improving data quality throughout the entire pipeline lifecycle.

Data Mesh Integration

Data Engineers design and build scalable data pipelines focused on ingestion and transformation within centralized systems, while DataOps Engineers automate and streamline pipeline operations with continuous integration and deployment practices crucial for Data Mesh integration. Data Mesh architecture demands DataOps Engineers to enable decentralized ownership and governance by orchestrating data products across distributed teams, enhancing reliability and collaboration.

CICD for Data Pipelines

Data Engineers build and maintain scalable data pipelines using technologies like Apache Spark, Hadoop, and SQL, focusing on data ingestion, transformation, and storage optimization. DataOps Engineers enhance these pipelines by implementing CI/CD practices with tools such as Jenkins, GitLab CI, and Airflow, enabling automated testing, deployment, and monitoring to ensure continuous integration and efficient delivery of reliable data workflows.

Automated Data Lineage Tracking

DataOps engineers specialize in automated data lineage tracking, utilizing advanced orchestration tools and metadata management systems to ensure real-time visibility and accuracy across complex data pipelines. Data engineers primarily build and maintain data pipelines, but often rely on manual or semi-automated processes for lineage tracking, limiting scalability and traceability in dynamic environments.

Data Engineer vs DataOps Engineer for data pipeline management. Infographic

hrdif.com

hrdif.com