On-premise development offers centralized control and security for processing solutions, ideal for organizations with strict data privacy requirements. Edge computing development brings processing closer to data sources, reducing latency and bandwidth usage, which is crucial for real-time applications. Choosing between on-premise and edge computing depends on factors such as data sensitivity, speed requirements, and infrastructure capabilities.

Table of Comparison

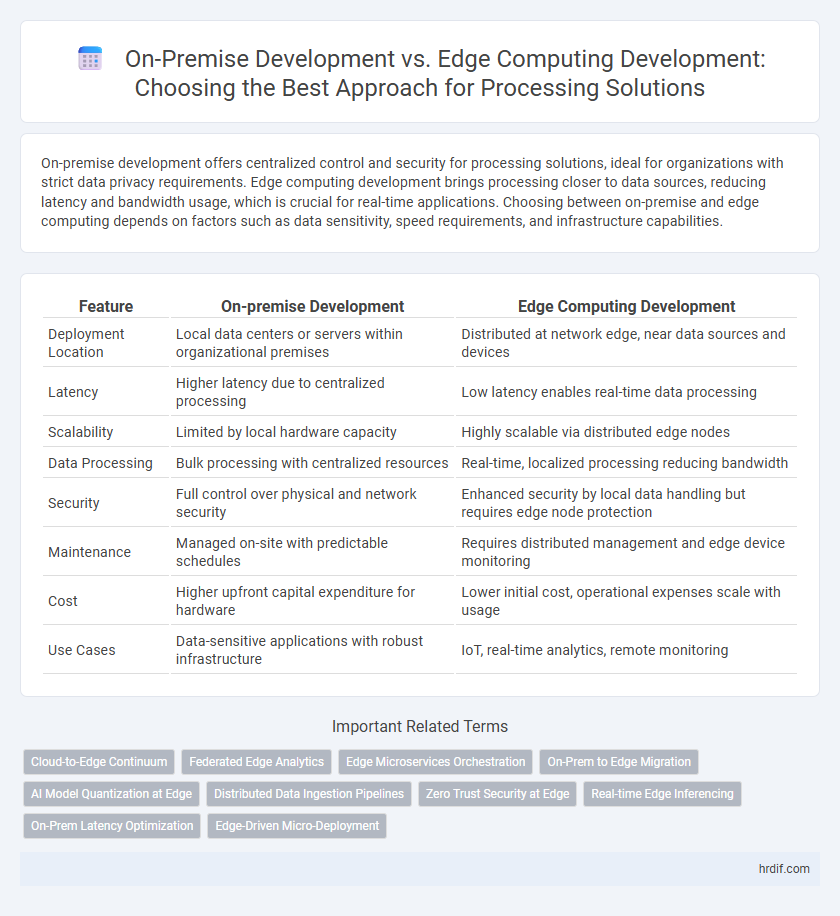

| Feature | On-premise Development | Edge Computing Development |
|---|---|---|
| Deployment Location | Local data centers or servers within organizational premises | Distributed at network edge, near data sources and devices |
| Latency | Higher latency due to centralized processing | Low latency enables real-time data processing |
| Scalability | Limited by local hardware capacity | Highly scalable via distributed edge nodes |
| Data Processing | Bulk processing with centralized resources | Real-time, localized processing reducing bandwidth |
| Security | Full control over physical and network security | Enhanced security by local data handling but requires edge node protection |
| Maintenance | Managed on-site with predictable schedules | Requires distributed management and edge device monitoring |
| Cost | Higher upfront capital expenditure for hardware | Lower initial cost, operational expenses scale with usage |
| Use Cases | Data-sensitive applications with robust infrastructure | IoT, real-time analytics, remote monitoring |
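The Cost row describes a trade-off between upfront capital expenditure and usage-based operating expense. A minimal Python sketch, assuming simple linear costs, finds the month in which cumulative on-premise spending stops exceeding cumulative edge spending. The function name `break_even_month` and all figures in the example are hypothetical, not benchmarks.

```python
import math
from typing import Optional


def break_even_month(onprem_capex: float, onprem_monthly_opex: float,
                     edge_capex: float, edge_monthly_opex: float) -> Optional[int]:
    """Return the first month m at which cumulative on-premise cost
    (capex + m * opex) is no higher than cumulative edge cost, or None if
    that never happens under this linear cost model."""
    capex_gap = onprem_capex - edge_capex              # extra upfront spend for on-premise
    opex_gap = edge_monthly_opex - onprem_monthly_opex  # extra monthly spend for edge
    if capex_gap <= 0:
        return 0      # on-premise is already no more expensive at month 0
    if opex_gap <= 0:
        return None   # edge is cheaper upfront and never costs more per month
    return math.ceil(capex_gap / opex_gap)


# Hypothetical figures: 120k upfront + 2k/month on-premise vs 20k + 6k/month at the edge.
print(break_even_month(120_000, 2_000, 20_000, 6_000))  # 25
```

With these made-up numbers the two options cost the same after 25 months; a real comparison would also account for hardware refresh cycles, staffing, and energy.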

Understanding On-premise Development in Processing Solutions

On-premise development in processing solutions involves deploying and managing applications on an organization's own servers and infrastructure, giving full control over data security and system customization. Processing data on-site can also reduce latency for users and devices at the same facility, which matters in industries that need real-time analytics and immediate responses. Keeping data in-house can simplify regulatory compliance and avoids risks specific to third-party cloud hosting, which makes on-premise development a common choice for sensitive and mission-critical data processing.

What is Edge Computing Development?

Edge computing development involves creating processing solutions that perform data computation near the source of data generation, minimizing latency and reducing bandwidth usage compared to centralized on-premise systems. This approach enhances real-time data processing capabilities for IoT devices, autonomous systems, and smart applications by distributing workloads across localized edge nodes. Developers must optimize software for constrained resources and ensure seamless integration with cloud infrastructures to maintain data consistency and security.
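Reducing bandwidth at the edge usually means sending compact summaries upstream instead of every raw sample. The Python sketch below assumes a hypothetical temperature sensor and a fixed alert threshold; `summarize_window` and `WindowSummary` are illustrative names, not part of any edge framework.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class WindowSummary:
    count: int
    minimum: float
    maximum: float
    average: float
    alerts: List[float]  # raw values kept only when they cross the threshold


def summarize_window(readings: List[float], alert_threshold: float) -> WindowSummary:
    """Collapse one window of raw readings into a single record on the edge node,
    so only this summary (plus any alert values) travels to the central site."""
    if not readings:
        raise ValueError("window must contain at least one reading")
    return WindowSummary(
        count=len(readings),
        minimum=min(readings),
        maximum=max(readings),
        average=mean(readings),
        alerts=[r for r in readings if r > alert_threshold],
    )


summary = summarize_window([20.0, 21.5, 22.0, 35.2, 21.0], alert_threshold=30.0)
print(summary.count, summary.maximum, summary.alerts)  # 5 35.2 [35.2]
```

Five raw samples become one record here; with realistic window sizes (thousands of samples per minute), the reduction in upstream traffic grows accordingly.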

Key Differences Between On-premise and Edge Computing Development

On-premise development centralizes processing in an organization's own data centers, offering strong control and security but limited scalability and higher latency when data sources are geographically distributed. Edge computing development distributes processing to the network edge, enabling real-time data analysis and lower latency for IoT and mobile applications. The key differences lie in how infrastructure is deployed, where data is processed, and how quickly each model responds to changing workloads.

Required Skills for On-premise vs Edge Computing Developers

On-premise development requires strong expertise in traditional server management, hardware configuration, and network security protocols to maintain centralized data centers. Edge computing developers need advanced skills in distributed computing, real-time data processing, and integration of IoT devices for decentralized processing at the network edge. Mastery of containerization, microservices architectures, and low-latency communication protocols is critical for edge computing, whereas on-premise environments more often run monolithic applications.

Career Pathways: On-premise Development Roles

On-premise development roles typically involve designing, deploying, and maintaining software applications within an organization's local infrastructure, requiring expertise in server management, network security, and database administration. Professionals in this career path often hold titles such as Systems Engineer, Infrastructure Developer, or DevOps Engineer, which emphasize strong knowledge of on-site hardware and traditional software stacks. Mastery of virtualization technologies and scripting languages, along with experience in legacy systems, is crucial for advancing in on-premise development careers.

Emerging Opportunities in Edge Computing Careers

Edge computing development creates emerging career opportunities by enabling real-time data processing closer to source devices, reducing latency and bandwidth use compared to traditional on-premise development. Professionals skilled in distributed computing architectures, IoT integration, and AI implementation are in high demand to design scalable edge solutions. The growth of industries like autonomous vehicles, smart cities, and industrial automation further drives the need for expertise in edge computing over conventional on-premise processing models.

Challenges and Advantages: On-premise vs Edge Computing Projects

On-premise development offers robust security and centralized control but faces challenges related to scalability, high upfront costs, and limited real-time processing capabilities. Edge computing development enhances real-time data processing, reduces latency, and improves bandwidth efficiency by processing data closer to the source, yet it encounters challenges in managing distributed infrastructure, ensuring data consistency, and maintaining security across multiple edge devices. Choosing between on-premise and edge computing solutions depends on specific project requirements such as latency sensitivity, data volume, and infrastructure management capabilities.
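The criteria in the last sentence (latency sensitivity, data volume, and capacity to manage infrastructure) can be expressed as a rough rule of thumb. The sketch below is a hypothetical heuristic, not an established method: `Requirements`, `suggest_deployment`, and the cutoff values are placeholder assumptions that each team would replace with its own requirements.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    max_latency_ms: float               # end-to-end latency the application tolerates
    daily_data_gb: float                # raw data generated per day at the sources
    sources_are_dispersed: bool         # data produced across many remote sites
    can_manage_distributed_fleet: bool  # team can operate and monitor many edge nodes


def suggest_deployment(req: Requirements,
                       latency_cutoff_ms: float = 20.0,
                       volume_cutoff_gb: float = 500.0) -> str:
    """Hypothetical heuristic: count signals that favor edge processing."""
    edge_signals = 0
    if req.max_latency_ms < latency_cutoff_ms:
        edge_signals += 1  # tight latency budget favors processing near the source
    if req.daily_data_gb > volume_cutoff_gb and req.sources_are_dispersed:
        edge_signals += 1  # large, scattered data makes backhaul expensive
    if edge_signals == 0:
        return "on-premise"
    if not req.can_manage_distributed_fleet:
        return "hybrid"    # edge is attractive, but operations capacity is limited
    return "edge"


print(suggest_deployment(Requirements(5, 1000, True, True)))  # edge
```

Returning "hybrid" when edge looks attractive but the team cannot run a fleet reflects the management challenge named above; the thresholds themselves carry no special meaning.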

Impact on Job Market: Shifts in Development Demands

On-premise development requires specialized IT infrastructure maintenance, leading to steady demand for in-house developers with expertise in local server management. Edge computing development drives growth in skills related to distributed systems, IoT integration, and real-time data processing, creating new job roles in network optimization and cybersecurity. The shift towards edge computing emphasizes demand for developers proficient in microservices, containerization, and low-latency application architectures, reshaping workforce requirements across industries.

Future Trends in Processing Solutions Development

Future trends in processing solutions development emphasize the integration of edge computing to enhance real-time data processing and reduce latency in on-premise environments. Hybrid architectures combining on-premise development with distributed edge computing nodes enable scalable, secure, and efficient data management tailored to IoT and AI applications. Emerging solutions prioritize low-latency processing, localized data analytics, and improved bandwidth utilization to meet growing demands in smart cities, autonomous systems, and Industry 4.0 deployments.
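In a hybrid architecture, one simple placement rule is to keep work at the central on-premise site unless the round trip there would miss the job's deadline. The sketch assumes processing time is roughly the same at both tiers, which real deployments rarely guarantee; `Job` and `route` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    deadline_ms: float     # latest acceptable response time
    processing_ms: float   # estimated compute time (assumed equal at both tiers)


def route(job: Job, onprem_rtt_ms: float) -> str:
    """Return "edge" when shipping the job to the central site would miss
    its deadline, otherwise "on-premise" for centralized processing."""
    central_latency_ms = onprem_rtt_ms + job.processing_ms
    return "edge" if central_latency_ms > job.deadline_ms else "on-premise"


for job in [Job("brake-control", 10, 2), Job("nightly-report", 60_000, 5_000)]:
    print(job.name, route(job, onprem_rtt_ms=40))
```

Latency-critical control loops land on edge nodes, while bulk analytics stays in the data center where storage and compute are concentrated.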

Upskilling for Success: Preparing for Both On-premise and Edge Computing

Upskilling for success in development requires mastering both on-premise and edge computing technologies to handle diverse processing needs efficiently. Developers need expertise in on-premise infrastructure management, including security hardening and capacity planning, as well as edge computing skills for low-latency data processing and real-time analytics at the network edge. Building hybrid proficiency enables development teams to design versatile solutions that balance centralized control with decentralized processing capabilities.

Related Important Terms

Cloud-to-Edge Continuum

On-premise development offers localized control and low-latency processing for data generated on the same site, which suits sensitive data handling, while edge computing development extends processing closer to distributed data sources, optimizing real-time analytics and reducing dependence on the cloud. Integrating both within the cloud-to-edge continuum enables hybrid architectures that balance scalability, security, and performance across distributed environments.

Federated Edge Analytics

Federated Edge Analytics extends edge computing development by processing data across multiple edge nodes, each of which analyzes its own data locally and shares only aggregated results with a coordinator. Compared with traditional centralized on-premise models, this reduces latency and improves data privacy because raw data stays where it was collected, while the combined insights still support real-time decision-making across distributed processing solutions.
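A basic form of federated analytics has each node compute local aggregates and share only those, never the raw records. The Python sketch below, with hypothetical names, merges per-node counts, sums, and sums of squares into a global mean and population variance; production systems would typically add secure aggregation and a numerically stabler variance formula.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class LocalStats:
    count: int
    total: float
    total_sq: float


def local_stats(values: Iterable[float]) -> LocalStats:
    """Runs on each edge node: only these three numbers leave the node."""
    vals = list(values)
    return LocalStats(len(vals), sum(vals), sum(v * v for v in vals))


def merge(stats: List[LocalStats]) -> Tuple[float, float]:
    """Runs on the coordinator: combine node summaries into a global
    mean and population variance without seeing any raw values."""
    n = sum(s.count for s in stats)
    if n == 0:
        raise ValueError("no data reported")
    total = sum(s.total for s in stats)
    total_sq = sum(s.total_sq for s in stats)
    mean = total / n
    variance = total_sq / n - mean * mean
    return mean, variance


node_a = local_stats([1.0, 2.0, 3.0])
node_b = local_stats([4.0, 5.0])
print(merge([node_a, node_b]))  # (3.0, 2.0)
```

The result matches computing the mean and variance over all five values directly, even though the coordinator only ever received two small summaries.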

Edge Microservices Orchestration

Edge microservices orchestration enables decentralized processing by managing containerized services closer to data sources, reducing latency and improving real-time decision-making compared to traditional on-premise development. This approach leverages distributed computing power and dynamic resource allocation, optimizing performance for IoT and edge devices in processing solutions.
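Orchestrators such as Kubernetes decide where each containerized service runs based on the resources nodes can offer. The sketch below is a deliberately simplified stand-in for that scheduling step, using first-fit decreasing on CPU requests only; real schedulers also filter and score nodes on memory, affinity rules, and other constraints. All node and service names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class EdgeNode:
    name: str
    free_cpu_millicores: int
    services: List[str] = field(default_factory=list)


@dataclass
class Service:
    name: str
    cpu_millicores: int


def place(services: List[Service], nodes: List[EdgeNode]) -> List[str]:
    """First-fit decreasing on CPU: place the largest services first, each on the
    first node with enough free CPU. Returns the names that could not be placed."""
    unplaced: List[str] = []
    for svc in sorted(services, key=lambda s: s.cpu_millicores, reverse=True):
        for node in nodes:
            if node.free_cpu_millicores >= svc.cpu_millicores:
                node.free_cpu_millicores -= svc.cpu_millicores
                node.services.append(svc.name)
                break
        else:
            unplaced.append(svc.name)  # no node had room for this service
    return unplaced


nodes = [EdgeNode("gateway-a", 1000), EdgeNode("gateway-b", 500)]
services = [Service("inference", 800), Service("ingest", 300),
            Service("metrics", 100), Service("video", 600)]
print(place(services, nodes))  # ['video']
print([(n.name, n.services) for n in nodes])
```

Placing large services first tends to reduce fragmentation on small edge nodes; the unplaced list is where a real orchestrator would trigger scaling or alerting.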

On-Prem to Edge Migration

Migrating from on-premise development to edge computing development enhances data processing speed by decentralizing computational resources closer to data sources, reducing latency and bandwidth usage. This transition supports real-time analytics and IoT applications by leveraging edge nodes, which complements traditional centralized on-premise infrastructures for hybrid processing solutions.

AI Model Quantization at Edge

On-premise development provides centralized infrastructure and control over data security for AI model training, while edge computing development runs quantized models for inference close to data sources, reducing latency and bandwidth usage. Quantization, typically applied before deployment, compresses a model by storing its weights at lower numerical precision (for example, 8-bit integers instead of 32-bit floats) so it fits limited edge hardware, often with only a small loss in accuracy. This makes deployment on IoT devices and autonomous systems more practical.
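Quantization is usually applied once, before deployment, and the edge device then runs inference with the compact model. The pure-Python sketch below shows symmetric per-tensor int8 quantization, one common scheme among several: each float weight maps to an integer in [-127, 127] through a single scale factor, cutting storage from 4 bytes (float32) to 1 byte per weight. The function names are illustrative; frameworks such as TensorFlow Lite and PyTorch provide their own quantization tooling.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map [-max|w|, max|w|] onto [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights; each error is at most scale / 2."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
print(q)  # [50, -127, 0, 100]
```

The accuracy cost comes from the rounding step: every weight moves by at most half a quantization step, which many models tolerate well.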

Distributed Data Ingestion Pipelines

On-premise development offers centralized control with robust security for distributed data ingestion pipelines, enabling low-latency processing within local infrastructure. Edge computing development enhances real-time data processing by distributing ingestion tasks closer to data sources, reducing bandwidth usage and improving scalability across geographically dispersed nodes.
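A common ingestion pattern at the edge is to buffer records locally and forward them in batches, flushing when either a size limit or an age limit is reached. The sketch below assumes a caller-supplied `send` function standing in for whatever transport the pipeline uses (for example, an HTTP or message-queue client); the class name and limits are hypothetical. For brevity, the age limit is only checked when a new record arrives; a production version would also flush on a timer.

```python
import time
from typing import Callable, List, Optional


class EdgeBatcher:
    """Buffer records on the edge node and forward them in batches."""

    def __init__(self, send: Callable[[List[dict]], None], max_batch: int = 100,
                 max_age_s: float = 5.0, clock: Callable[[], float] = time.monotonic):
        self._send = send
        self._max_batch = max_batch
        self._max_age_s = max_age_s
        self._clock = clock
        self._buffer: List[dict] = []
        self._oldest: Optional[float] = None  # arrival time of the oldest buffered record

    def add(self, record: dict) -> None:
        if not self._buffer:
            self._oldest = self._clock()
        self._buffer.append(record)
        too_big = len(self._buffer) >= self._max_batch
        too_old = self._clock() - self._oldest >= self._max_age_s
        if too_big or too_old:
            self.flush()

    def flush(self) -> None:
        if self._buffer:
            self._send(self._buffer)
            self._buffer = []  # new list, so the sent batch is never mutated
            self._oldest = None


batcher = EdgeBatcher(send=lambda batch: print(f"forwarding {len(batch)} records"),
                      max_batch=2)
batcher.add({"sensor": "s1", "value": 20.5})
batcher.add({"sensor": "s1", "value": 20.7})  # prints: forwarding 2 records
```

Batching trades a bounded delay for fewer, larger upstream transfers, which is how edge ingestion reduces bandwidth and per-message overhead.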

Zero Trust Security at Edge

On-premise development provides strong control over infrastructure, while edge computing development can apply Zero Trust Security principles directly at the data source, minimizing attack surfaces and enforcing strict access controls. Implementing Zero Trust at the edge means continuously verifying every device and user rather than trusting anything because of its network location, which reduces risks associated with data transmission and supports real-time, secure decision-making in distributed environments.
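Zero Trust means no request is trusted because of where it comes from; each one is authenticated on its own. The sketch below illustrates that per-request check with Python's standard `hmac` module, assuming a hypothetical setup in which every device shares a secret key with the edge node and signs its ID plus an expiry time. Real deployments more often rely on mutual TLS and certificate-based identities; the function names here are illustrative.

```python
import hashlib
import hmac
import time
from typing import Dict, Optional


def sign(device_id: str, expires_at: int, key: bytes) -> str:
    """Device side: sign the identity claim and its expiry time."""
    message = f"{device_id}|{expires_at}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_request(device_id: str, expires_at: int, signature: str,
                   device_keys: Dict[str, bytes], now: Optional[float] = None) -> bool:
    """Edge side: verify every request, denying by default."""
    key = device_keys.get(device_id)
    if key is None:
        return False  # unknown device: no implicit trust
    current = time.time() if now is None else now
    if current >= expires_at:
        return False  # expired credential must be renewed
    expected = sign(device_id, expires_at, key)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, signature)


keys = {"sensor-7": b"hypothetical-shared-secret"}
expiry = int(time.time()) + 300
token = sign("sensor-7", expiry, keys["sensor-7"])
print(verify_request("sensor-7", expiry, token, keys))  # True
```

Short expiry times force devices to re-prove their identity regularly, which is the "continuous verification" idea in miniature.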

Real-time Edge Inferencing

On-premise development offers controlled environments for stable processing, but it often struggles with latency in real-time edge inferencing. Edge computing development instead processes data on or near the devices that produce it, reducing latency and enabling faster decisions. Real-time edge inferencing also benefits from better bandwidth efficiency and stronger privacy, since less data depends on a round trip to the cloud, which matters in critical applications such as autonomous vehicles and industrial automation.

On-Prem Latency Optimization

When data is generated on the same site as the data center, on-premise development can deliver excellent latency by processing it locally and avoiding wide-area network delays, whereas edge deployments may see variable latency across distributed infrastructure and often run on less powerful hardware. Dedicated on-site hardware with direct access to real-time data supports the fast response times needed by latency-sensitive applications in industries such as manufacturing and finance.
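Whether on-premise or edge placement wins on latency depends on where the data originates: total response time is roughly the network round trip plus processing time. The sketch below compares candidate placements against a latency budget; `Placement` and `fastest_within_budget` are hypothetical names, and every number in the usage lines is an assumption chosen to illustrate the two cases, not a measurement.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Placement:
    name: str
    network_rtt_ms: float   # round trip between the data source and the compute
    processing_ms: float    # compute time on that hardware

    def total_ms(self) -> float:
        return self.network_rtt_ms + self.processing_ms


def fastest_within_budget(options: List[Placement], budget_ms: float) -> Optional[Placement]:
    """Pick the lowest-latency placement that meets the budget, or None."""
    feasible = [p for p in options if p.total_ms() <= budget_ms]
    return min(feasible, key=Placement.total_ms) if feasible else None


# Data sources on the same site as the data center (hypothetical numbers).
same_site = [Placement("on-premise", 1.0, 4.0), Placement("edge", 0.5, 12.0)]
print(fastest_within_budget(same_site, budget_ms=10).name)  # on-premise

# Data sources at a remote site far from the data center.
remote_site = [Placement("on-premise", 60.0, 4.0), Placement("edge", 0.5, 12.0)]
print(fastest_within_budget(remote_site, budget_ms=20).name)  # edge
```

Faster on-site servers win when the network hop is short; once the round trip dominates, a slower device next to the data source comes out ahead.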

Edge-Driven Micro-Deployment

Edge-driven micro-deployment enables processing solutions to operate closer to data sources, reducing latency and bandwidth usage compared to traditional on-premise development. This approach enhances real-time analytics and scalability by distributing microservices across localized edge devices, optimizing performance in IoT and distributed environments.
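Micro-deployments across many edge devices are commonly rolled out in waves, so a faulty release stops before it reaches the whole fleet. The sketch below assumes a caller-supplied `deploy` function that updates one device and reports success; `rollout_in_waves`, the wave sizes, and the 10% failure threshold are hypothetical choices.

```python
from typing import Callable, List


def rollout_in_waves(devices: List[str], wave_sizes: List[int],
                     deploy: Callable[[str], bool],
                     max_failure_rate: float = 0.1) -> List[str]:
    """Deploy to devices in successive waves and halt if any wave's failure
    rate exceeds the threshold. Devices beyond the listed waves are not
    touched. Returns the devices that were updated successfully."""
    updated: List[str] = []
    start = 0
    for size in wave_sizes:
        wave = devices[start:start + size]
        if not wave:
            break
        start += size
        results = {d: deploy(d) for d in wave}
        updated.extend(d for d, ok in results.items() if ok)
        failures = sum(1 for ok in results.values() if not ok)
        if failures / len(wave) > max_failure_rate:
            break  # halt: remaining devices keep the previous version
    return updated


fleet = [f"edge-{i}" for i in range(10)]
# Simulated deploy that fails on one device.
done = rollout_in_waves(fleet, [1, 3, 6], deploy=lambda d: d != "edge-2")
print(done)  # ['edge-0', 'edge-1', 'edge-3']
```

Starting with a single canary device keeps the blast radius small; the failure in the second wave stops the rollout before the remaining six devices are touched.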

On-premise Development vs Edge Computing Development for processing solutions. Infographic

hrdif.com