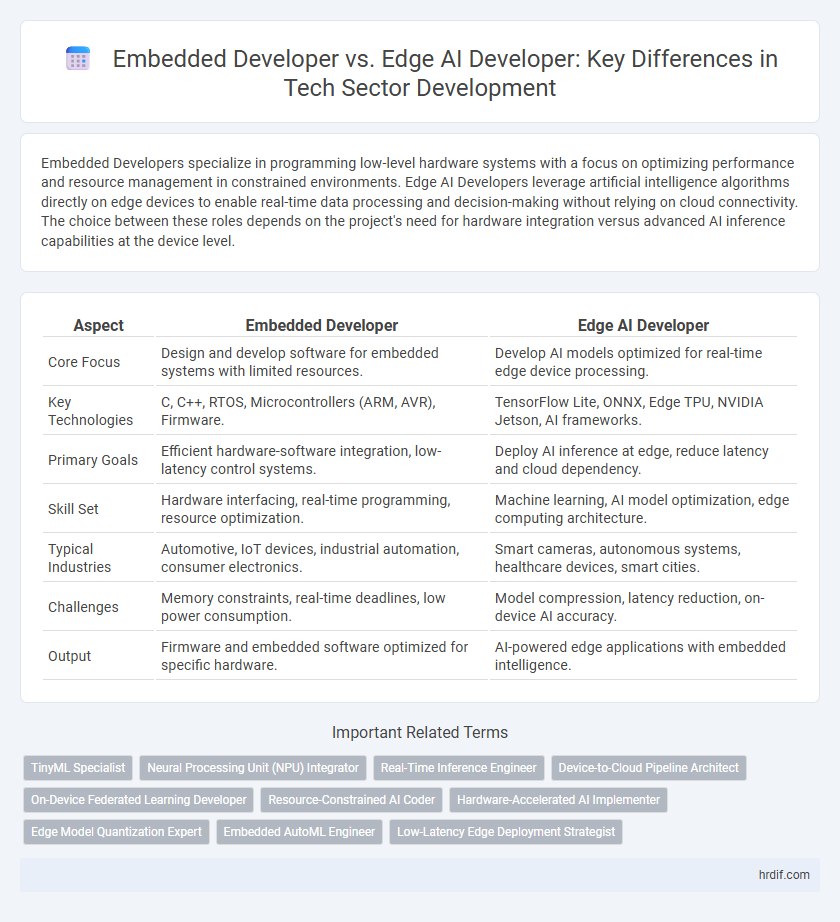

Embedded Developers specialize in programming low-level hardware systems with a focus on optimizing performance and resource management in constrained environments. Edge AI Developers leverage artificial intelligence algorithms directly on edge devices to enable real-time data processing and decision-making without relying on cloud connectivity. The choice between these roles depends on the project's need for hardware integration versus advanced AI inference capabilities at the device level.

Table of Comparison

| Aspect | Embedded Developer | Edge AI Developer |
|---|---|---|
| Core Focus | Design and develop software for embedded systems with limited resources. | Develop AI models optimized for real-time edge device processing. |
| Key Technologies | C, C++, RTOS, Microcontrollers (ARM, AVR), Firmware. | TensorFlow Lite, ONNX, Edge TPU, NVIDIA Jetson, AI frameworks. |
| Primary Goals | Efficient hardware-software integration, low-latency control systems. | Deploy AI inference at edge, reduce latency and cloud dependency. |
| Skill Set | Hardware interfacing, real-time programming, resource optimization. | Machine learning, AI model optimization, edge computing architecture. |
| Typical Industries | Automotive, IoT devices, industrial automation, consumer electronics. | Smart cameras, autonomous systems, healthcare devices, smart cities. |
| Challenges | Memory constraints, real-time deadlines, low power consumption. | Model compression, latency reduction, on-device AI accuracy. |
| Output | Firmware and embedded software optimized for specific hardware. | AI-powered edge applications with embedded intelligence. |

Role Overview: Embedded Developer vs Edge AI Developer

Embedded Developers design and optimize software directly interfacing with hardware for real-time applications, focusing on microcontrollers and low-level programming in C or Assembly. Edge AI Developers integrate machine learning models at the network edge, enabling real-time data processing and decision-making on devices with limited computational resources, utilizing frameworks like TensorFlow Lite or PyTorch Mobile. While Embedded Developers prioritize hardware-software integration and resource efficiency, Edge AI Developers emphasize AI model deployment and optimization for latency-sensitive, inference-driven tasks on edge devices.

Core Skill Sets in Embedded and Edge AI Development

Embedded developers specialize in low-level programming languages such as C and assembly, focusing on real-time operating systems, hardware interfacing, and resource-constrained environments to optimize performance and power consumption. Edge AI developers require expertise in AI model deployment, containerization (e.g., Docker), and frameworks like TensorFlow Lite or OpenVINO for running machine learning algorithms on edge devices with limited compute. Both roles demand proficiency in sensor integration, firmware development, and debugging embedded systems, but Edge AI developers emphasize data processing and inference capabilities at the network edge for latency reduction and enhanced decision-making.

Typical Job Responsibilities: A Comparative Analysis

Embedded Developers primarily focus on designing, coding, and testing software for microcontroller-based systems, ensuring efficient hardware-software integration and real-time performance. Edge AI Developers work on implementing machine learning models directly on edge devices, optimizing algorithms for low latency, power efficiency, and limited computational resources. Both roles require proficiency in embedded systems, but Edge AI Developers emphasize AI model deployment and data processing at the network edge to enable intelligent decision-making without reliance on cloud connectivity.

Essential Tools and Technologies: Embedded vs Edge AI

Embedded Developers primarily use microcontrollers, real-time operating systems (RTOS) such as FreeRTOS, and languages like C/C++ for hardware-level programming and system optimization. Edge AI Developers rely on frameworks like TensorFlow Lite and on hardware platforms such as NVIDIA Jetson, running AI models directly on edge devices to enable real-time data processing and decision-making. Both roles require proficiency in sensor interfacing and low-latency communication protocols, but Edge AI development emphasizes machine learning toolchains and GPU acceleration for on-device inference.

Industry Demand and Recruitment Trends

Embedded developers traditionally focus on firmware and hardware integration in industries like automotive and consumer electronics, where IoT expansion sustains steady demand. Edge AI developers specialize in deploying machine learning models on edge devices, and demand for them is growing rapidly as sectors such as manufacturing and smart cities adopt real-time analytics and AI-driven automation. Recruitment trends indicate a sharper rise in demand for Edge AI skills, with companies prioritizing candidates who are adept in both AI frameworks and embedded systems.

Career Growth Opportunities in Both Fields

Embedded Developers benefit from steady demand in IoT, automotive, and consumer electronics sectors, with career growth driven by advancements in low-level hardware integration and real-time systems optimization. Edge AI Developers experience rapid expansion due to the rising adoption of AI-powered devices requiring on-device processing, offering opportunities in machine learning model deployment, edge computing infrastructure, and cross-disciplinary innovation. Both fields provide strong career trajectories, with Embedded Developers excelling in hardware-software co-design and Edge AI Developers leading in intelligent system development and AI integration at the network edge.

Salary Comparison: Embedded Developers vs Edge AI Developers

Embedded developers typically earn between $80,000 and $120,000 annually, reflecting the demand for expertise in low-level programming and hardware integration. Edge AI developers command higher salaries, often ranging from $110,000 to $150,000, due to their specialized skills in deploying artificial intelligence models on edge devices. The rising adoption of AI-enabled edge computing drives salary growth in the Edge AI developer role, positioning it as a lucrative career path in the tech sector.

Project Types and Real-World Applications

Embedded Developers primarily work on firmware and hardware integration projects for IoT devices, automotive systems, and consumer electronics, focusing on optimizing software for constrained environments. Edge AI Developers design and deploy machine learning models on edge devices, enabling real-time data processing and decision-making in applications such as smart cameras, industrial automation, and autonomous vehicles. Both roles are critical in advancing the tech sector by delivering efficient, low-latency solutions tailored to specific hardware and use-case requirements.

Learning Paths and Certification Requirements

Embedded Developers typically focus on mastering microcontroller programming, real-time operating systems, and low-level hardware interfacing, with certifications like Certified Embedded Systems Engineer (CESE) emphasizing hardware-software integration skills. Edge AI Developers prioritize expertise in machine learning model optimization, edge computing frameworks, and model deployment on constrained devices, often backed by certifications such as AWS Certified Machine Learning - Specialty or those offered by the NVIDIA Deep Learning Institute. Both roles demand continuous learning through specialized courses and practical experience, but Edge AI development leans heavily on AI frameworks and cloud-edge synergy, while Embedded Development centers on embedded systems and firmware proficiency.

Future Prospects: Evolution of Embedded and Edge AI Roles

Embedded developers will increasingly integrate AI capabilities directly into hardware, enhancing device autonomy and efficiency in sectors such as IoT and automotive. Edge AI developers will continue to deploy machine learning models locally on edge devices, reducing latency and improving real-time data processing for applications like smart cities and industrial automation. As embedded systems and edge AI converge, developers will need to master both hardware constraints and advanced AI algorithms.

Related Important Terms

TinyML Specialist

Embedded Developers specialize in designing and optimizing firmware for microcontrollers, ensuring low-power and efficient performance in constrained hardware environments. Edge AI Developers, particularly TinyML Specialists, focus on deploying machine learning models on resource-limited edge devices, enabling real-time AI inference with minimal latency and power consumption in IoT applications.
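To make the resource limits concrete, the following minimal Python sketch estimates whether a model's weights fit within a microcontroller's flash budget. The function names, the 250,000-parameter model, and the 512 KiB budget are illustrative assumptions, not figures from any particular device. The estimate also ignores activations, runtime code, and model metadata.

```python
def weight_bytes(num_params: int, bits_per_weight: int) -> int:
    """Bytes needed to store the weights alone, rounded up to whole bytes."""
    return (num_params * bits_per_weight + 7) // 8


def fits_in_flash(num_params: int, bits_per_weight: int, flash_budget_bytes: int) -> bool:
    """True if the weights fit the flash budget (activations and code are ignored)."""
    return weight_bytes(num_params, bits_per_weight) <= flash_budget_bytes


# Hypothetical example: a 250,000-parameter model and a 512 KiB flash budget.
FLASH_BUDGET = 512 * 1024
for bits in (32, 8):
    size = weight_bytes(250_000, bits)
    verdict = fits_in_flash(250_000, bits, FLASH_BUDGET)
    print(f"{bits}-bit weights: {size} bytes, fits: {verdict}")
```

Under these assumed numbers, the 32-bit weights exceed the budget while the 8-bit weights fit, which is one reason low-precision models are common in this space.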

Neural Processing Unit (NPU) Integrator

Embedded Developers focus on integrating Neural Processing Units (NPUs) at the hardware-software interface to optimize low-level embedded systems for real-time AI inference. Edge AI Developers use NPUs to deploy advanced machine learning models on edge devices, enhancing localized data processing and reducing latency in AI-driven applications.

Real-Time Inference Engineer

An Embedded Developer specializes in designing and optimizing software for hardware-constrained environments, ensuring efficient low-level code execution on microcontrollers or ASICs, while an Edge AI Developer focuses on deploying advanced machine learning models for real-time inference on edge devices with limited resources. Real-Time Inference Engineers bridge these roles by optimizing AI algorithms and embedded systems to deliver low-latency decision-making in applications such as autonomous vehicles, IoT, and smart cameras.

Device-to-Cloud Pipeline Architect

Embedded Developers specialize in low-level programming and hardware integration essential for optimizing device performance, while Edge AI Developers focus on deploying machine learning models at the network edge to enable real-time data processing and analytics. The Device-to-Cloud Pipeline Architect designs seamless data flows from embedded systems through edge AI platforms to cloud infrastructures, ensuring efficient, scalable, and secure end-to-end connectivity in IoT and AI-driven environments.

On-Device Federated Learning Developer

On-device federated learning developers specialize in creating privacy-preserving machine learning models that train directly on edge devices, enhancing data security because raw data never has to leave the device for a centralized server. Embedded developers focus on integrating hardware and software systems to optimize device performance, whereas edge AI developers combine these skills with advanced AI algorithms to enable real-time, decentralized intelligence on resource-constrained devices.
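One common way to combine on-device training results is federated averaging, in which only model weights, not raw data, are shared and then merged into a sample-weighted mean. The sketch below is a simplified pure-Python illustration under that assumption. The function name and the flat-list weight format are hypothetical and do not reflect any specific framework's API.

```python
def federated_average(client_updates):
    """Sample-weighted mean of client weight vectors.

    client_updates: list of (weights, num_samples) pairs, where weights is a
    flat list of floats and every client uses the same vector length.
    """
    if not client_updates:
        raise ValueError("at least one client update is required")
    total_samples = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total_samples
        for i in range(dim)
    ]


# Hypothetical round: two devices report locally trained weights.
updates = [
    ([1.0, 2.0], 1),  # device A trained on 1 sample
    ([3.0, 4.0], 3),  # device B trained on 3 samples
]
print(federated_average(updates))  # [2.5, 3.5]
```

Weighting by sample count lets devices that saw more data influence the merged model more. A production system would add secure aggregation and communication handling, which are omitted here.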

Resource-Constrained AI Coder

Embedded developers specialize in creating efficient, low-level software for resource-constrained systems, optimizing code to run AI models on microcontrollers and limited hardware. Edge AI developers focus on deploying intelligent algorithms at the network edge, balancing model complexity with real-time performance and minimal power consumption on devices like IoT sensors and embedded GPUs.

Hardware-Accelerated AI Implementer

Embedded Developers specialize in integrating software with hardware platforms to optimize system performance, while Edge AI Developers focus on deploying AI models directly on edge devices using hardware acceleration such as FPGAs and GPUs to achieve low-latency processing. Hardware-accelerated AI implementation drives advancements in real-time analytics and autonomous systems by leveraging specific AI inference engines embedded within edge hardware.

Edge Model Quantization Expert

An Edge AI Developer specializing in edge model quantization optimizes AI models to run efficiently on low-power, resource-constrained devices, enabling real-time inference at the network edge. Unlike traditional Embedded Developers who focus on firmware and hardware integration, Edge AI Developers leverage model compression techniques such as quantization and pruning to minimize latency and power consumption while maintaining accuracy in AI-driven applications.
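As a rough illustration of what quantization does, the sketch below maps floating-point weights to 8-bit integers using an affine (scale and zero-point) scheme, then maps them back. It is a simplified, framework-independent example with hypothetical function names. Real toolchains add per-channel scales, calibration data, and quantized kernels.

```python
def quantize_int8(values):
    """Affine quantization of floats to int8. Returns (q, scale, zero_point)."""
    # Extend the range to include 0.0 so that zero is represented exactly.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0
    if scale == 0.0:  # all-zero input: any positive scale works
        scale = 1.0
    zero_point = round(-128 - lo / scale)
    q = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Map int8 codes back to approximate float values."""
    return [(x - zero_point) * scale for x in q]


# Hypothetical weights for illustration.
weights = [-1.0, -0.25, 0.0, 0.4, 1.0]
q, scale, zero_point = quantize_int8(weights)
restored = dequantize(q, scale, zero_point)
worst = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"max error {worst:.4f} with step {scale:.4f}")
```

Each weight now occupies one byte instead of four, at the cost of a reconstruction error on the order of one quantization step. This is the accuracy trade-off the role has to manage.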

Embedded AutoML Engineer

Embedded AutoML Engineers specialize in designing machine learning models optimized for deployment on resource-constrained devices, bridging the gap between Embedded Developers and Edge AI Developers by integrating automated model selection and tuning directly into embedded systems. Their expertise in AutoML accelerates efficient real-time AI inference on edge devices, enhancing performance and reducing latency critical for automotive and IoT applications.

Low-Latency Edge Deployment Strategist

An Embedded Developer specializes in optimizing hardware-level performance for real-time systems, ensuring minimal latency through efficient code on microcontrollers and SoCs. An Edge AI Developer focuses on deploying machine learning models near data sources, leveraging edge computing to deliver ultra-low-latency AI inference critical for applications such as autonomous vehicles and industrial automation.
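Low-latency claims are usually checked against a deadline using tail latency rather than the average. The sketch below shows one simple way to do that with a nearest-rank percentile over recorded inference times. The function names, the 99th-percentile default, and the sample values are assumptions chosen for illustration.

```python
def percentile_nearest_rank(samples, percentile: int):
    """Nearest-rank percentile; percentile is an integer from 1 to 100."""
    if not samples or not 1 <= percentile <= 100:
        raise ValueError("need at least one sample and a percentile in 1..100")
    ordered = sorted(samples)
    # Integer ceiling of percentile * n / 100, avoiding float rounding.
    rank = (percentile * len(ordered) + 99) // 100
    return ordered[rank - 1]


def meets_deadline(latencies_ms, deadline_ms: float, percentile: int = 99) -> bool:
    """True if the chosen tail-latency percentile is within the deadline."""
    return percentile_nearest_rank(latencies_ms, percentile) <= deadline_ms


# Hypothetical measurements: 100 inference runs taking 1 ms to 100 ms.
latencies = list(range(1, 101))
print(percentile_nearest_rank(latencies, 99))      # 99
print(meets_deadline(latencies, deadline_ms=50))   # False
```

Hard real-time systems typically budget for the worst case rather than a percentile. Passing `percentile=100` in this sketch returns the maximum observed latency.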

Embedded Developer vs Edge AI Developer for tech sector. Infographic

hrdif.com
