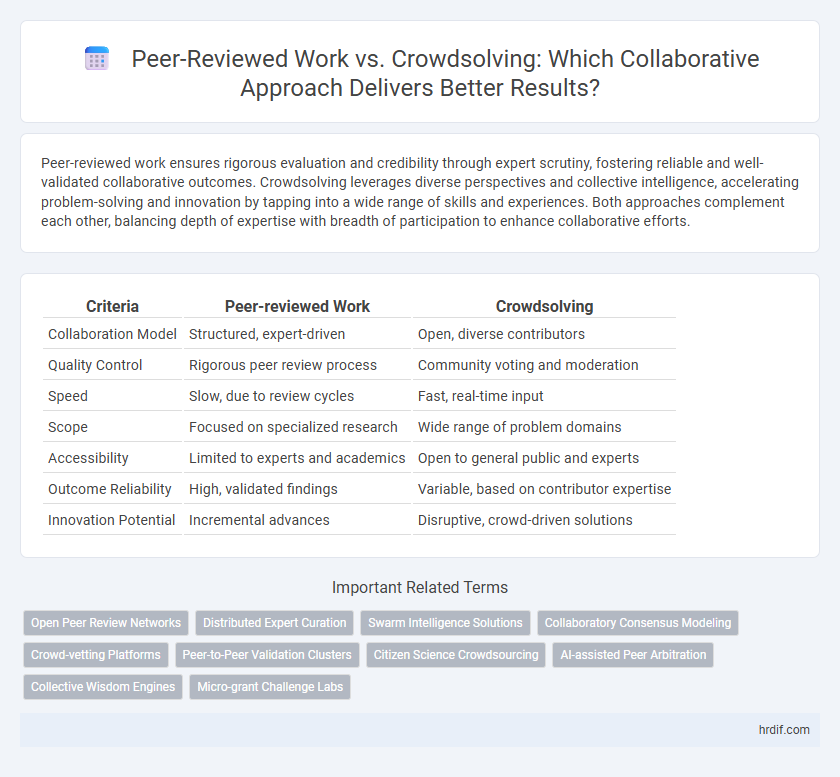

Peer-reviewed work ensures rigorous evaluation and credibility through expert scrutiny, fostering reliable and well-validated collaborative outcomes. Crowdsolving leverages diverse perspectives and collective intelligence, accelerating problem-solving and innovation by tapping into a wide range of skills and experiences. Both approaches complement each other, balancing depth of expertise with breadth of participation to enhance collaborative efforts.

Table of Comparison

| Criteria | Peer-reviewed Work | Crowdsolving |
|---|---|---|
| Collaboration Model | Structured, expert-driven | Open, diverse contributors |
| Quality Control | Rigorous peer review process | Community voting and moderation |
| Speed | Slow, due to review cycles | Fast, real-time input |
| Scope | Focused on specialized research | Wide range of problem domains |
| Accessibility | Limited to experts and academics | Open to the general public and experts |
| Outcome Reliability | High, validated findings | Variable, based on contributor expertise |
| Innovation Potential | Incremental advances | Disruptive, crowd-driven solutions |

Understanding Peer-Reviewed Work in Collaborative Environments

Peer-reviewed work ensures rigor and credibility by subjecting research to expert evaluation, fostering trust and reliability in collaborative environments. This process emphasizes methodological soundness and collective accountability, crucial for long-term knowledge building among teams. Understanding peer-review mechanisms enhances collaboration by aligning contributors with established academic standards and promoting transparent, high-quality outputs.

Defining Crowdsolving and Its Role in Modern Collaboration

Crowdsolving harnesses the collective intelligence of diverse participants through open calls, enabling rapid problem-solving and innovation beyond traditional peer-reviewed methods. Unlike peer-reviewed work, which relies on expert evaluation and formal validation, crowdsolving emphasizes real-time collaboration and iterative feedback from a broad community. This approach accelerates discovery and democratizes contribution, making it a pivotal model for modern collaborative efforts in research and development.

Key Differences Between Peer-Reviewed and Crowdsolving Models

Peer-reviewed work emphasizes rigorous evaluation by domain experts to ensure accuracy, credibility, and adherence to established standards, often resulting in slower but highly reliable outcomes. Crowdsolving leverages diverse, large-scale participation for rapid ideation and problem-solving, benefiting from varied perspectives but with less formal validation of results. The key difference lies in peer review's structured quality control versus crowdsolving's speed and inclusivity in collaborative innovation.

Quality Assurance: Peer Review vs Crowd Wisdom

Peer review provides quality assurance through rigorous expert evaluation, maintaining accuracy and scholarly standards in collaborative projects. Crowdsolving leverages diverse perspectives from large groups, which enhances innovation but can make quality control less consistent and less thorough. Balancing peer review's structured validation with crowd wisdom's broad input can optimize the reliability and creativity of collaborative efforts.

Speed and Scalability: Which Model Accelerates Problem Solving?

Peer-reviewed work ensures rigorous evaluation but often has slower turnaround times because of in-depth analysis and formal publication processes. Crowdsolving accelerates problem solving by drawing on large, diverse participant pools whose members can contribute in parallel. This scalability can shorten turnaround significantly, making crowdsolving platforms well suited to urgent or complex problems that require broad input.

Inclusivity and Diversity of Input: Comparing Both Approaches

Peer-reviewed work typically involves selected experts and maintains rigorous standards, which can limit diversity but ensures depth and quality of input. Crowdsolving leverages a broad, inclusive range of participants from diverse backgrounds, enhancing creativity and solution variety through collective intelligence. Inclusivity in crowdsolving fosters innovation by integrating multiple perspectives, whereas peer-reviewed work emphasizes specialized insights for validated outcomes.

Intellectual Property and Recognition in Collaborative Contexts

Peer-reviewed work provides clear attribution and formal recognition through established academic channels, documenting authorship and safeguarding contributors' credit. Crowdsolving platforms often struggle to attribute individual contributions, which can lead to ambiguous ownership and limited formal recognition. Effective collaboration requires balancing transparent intellectual property (IP) management with mechanisms that acknowledge diverse participant inputs.

Application Suitability: When to Choose Peer Review or Crowdsolving

Peer-reviewed work excels in fields requiring rigorous validation and high accuracy, such as academic research and clinical studies, ensuring credibility through expert evaluation. Crowdsolving proves effective for complex, open-ended problems in innovation-driven industries and large-scale data analysis, leveraging diverse perspectives for creative solutions. Choosing between peer review and crowdsolving depends on the need for authoritative verification versus collective intelligence and speed in problem-solving.

Impact on Career Growth and Professional Credibility

Peer-reviewed work enhances professional credibility by ensuring rigorous validation of research, which is highly valued in academia and specialized industries and directly influences career advancement through recognized publications. Crowdsolving fosters collaborative problem-solving across diverse areas of expertise, accelerating innovation and giving participants a way to demonstrate adaptability, a trait increasingly prized in dynamic professional environments. Balancing peer-reviewed achievements with crowdsolving participation can support career growth by combining formal recognition with practical, real-world impact.

Future Trends: The Evolving Landscape of Collaborative Methods

Peer-reviewed work remains the gold standard for validating accuracy and credibility in collaborative research, while crowdsolving harnesses large, diverse groups to accelerate innovation and problem-solving. Future trends indicate a hybrid approach integrating rigorous peer review with real-time crowd input, leveraging AI to filter and enhance contributions for efficient knowledge synthesis. This evolving landscape promotes inclusivity and scalability, driving breakthroughs across scientific, technological, and social domains.

Related Important Terms

Open Peer Review Networks

Open peer review networks enhance collaboration by enabling transparent, iterative evaluation from a diverse group of experts, increasing the reliability and inclusivity of peer-reviewed work. Crowdsolving leverages collective intelligence and real-time feedback, accelerating the problem-solving process while complementing traditional peer review through broader participation and diverse perspectives.

Distributed Expert Curation

Distributed expert curation in peer-reviewed work ensures rigorous evaluation and validation by specialized professionals, enhancing the accuracy and credibility of collaborative outputs. Crowdsolving leverages diverse perspectives to generate innovative solutions rapidly but may lack the structured quality control inherent in expert-driven peer review.

Swarm Intelligence Solutions

Peer-reviewed work ensures rigorous validation and credibility in collaborative projects, while crowdsolving leverages swarm intelligence solutions to harness diverse expertise rapidly and adaptively. Swarm intelligence algorithms such as ant colony optimization and particle swarm optimization exemplify how decentralized collaboration can solve complex problems efficiently.
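To make the swarm idea concrete, here is a minimal particle swarm optimization sketch. It is a generic global-best variant, not a model of any particular crowdsolving platform: each particle loosely stands in for a contributor who moves toward both their own best-known solution and the best solution found by the whole swarm. The toy objective `sphere`, the parameter values (`w`, `c1`, `c2`), and the function names are illustrative assumptions.

```python
import random


def sphere(position):
    """Toy objective (assumed for illustration): sum of squares, minimum 0 at the origin."""
    return sum(x * x for x in position)


def particle_swarm_minimize(objective, dim, n_particles=30, iterations=200,
                            bounds=(-5.0, 5.0), w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize `objective` over a box with a basic global-best particle swarm."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    lo, hi = bounds
    positions = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    velocities = [[0.0] * dim for _ in range(n_particles)]

    # Each particle remembers the best position it has personally found.
    personal_best = [p[:] for p in positions]
    personal_best_val = [objective(p) for p in positions]

    # The swarm shares the best position found by any particle.
    best_i = min(range(n_particles), key=lambda i: personal_best_val[i])
    global_best = personal_best[best_i][:]
    global_best_val = personal_best_val[best_i]

    for _ in range(iterations):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Velocity = inertia + pull toward own best + pull toward swarm best.
                velocities[i][d] = (w * velocities[i][d]
                                    + c1 * r1 * (personal_best[i][d] - positions[i][d])
                                    + c2 * r2 * (global_best[d] - positions[i][d]))
                # Move, then clamp the coordinate to the search box.
                positions[i][d] = min(max(positions[i][d] + velocities[i][d], lo), hi)
            value = objective(positions[i])
            if value < personal_best_val[i]:
                personal_best[i] = positions[i][:]
                personal_best_val[i] = value
                if value < global_best_val:
                    global_best = positions[i][:]
                    global_best_val = value
    return global_best, global_best_val


if __name__ == "__main__":
    best_position, best_value = particle_swarm_minimize(sphere, dim=2)
    print(best_position, best_value)
```

The inertia weight `w` keeps particles exploring, while `c1` and `c2` balance individual and shared knowledge; tuning them trades broad exploration against fast convergence.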

Collaboratory Consensus Modeling

Collaboratory consensus modeling leverages the structured rigor of peer-reviewed work and the diverse input of crowdsolving to enhance collaborative problem-solving accuracy and innovation. Integrating expert validation with collective intelligence accelerates consensus-building and improves model robustness in complex research environments.

Crowd-vetting Platforms

Crowd-vetting platforms leverage diverse, real-time input from a broad community, enabling faster validation and refinement of ideas compared to traditional peer-reviewed work, which often involves lengthy review cycles by a limited number of experts. This dynamic approach enhances collaboration by democratizing knowledge assessment and accelerating innovation through collective intelligence.
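One way a crowd-vetting platform could rank ideas from up and down votes is by the lower bound of the Wilson score confidence interval, which keeps an item with a handful of unanimous votes from outranking one with many mostly positive votes. The sketch below assumes simple binary votes; the idea names and vote counts are hypothetical, and real platforms may use entirely different ranking rules.

```python
import math


def wilson_lower_bound(upvotes, downvotes, z=1.96):
    """Lower bound of the Wilson score interval for the share of positive votes.

    z=1.96 corresponds to roughly 95% confidence.
    """
    n = upvotes + downvotes
    if n == 0:
        return 0.0  # no votes yet: rank at the bottom
    p_hat = upvotes / n
    z2 = z * z
    centre = p_hat + z2 / (2 * n)
    margin = z * math.sqrt(p_hat * (1 - p_hat) / n + z2 / (4 * n * n))
    return (centre - margin) / (1 + z2 / n)


def rank_ideas(votes):
    """Sort idea names by Wilson lower bound, highest first.

    `votes` maps a (hypothetical) idea name to an (upvotes, downvotes) pair.
    """
    return sorted(votes, key=lambda name: wilson_lower_bound(*votes[name]), reverse=True)


if __name__ == "__main__":
    votes = {"idea_a": (5, 0), "idea_b": (90, 10), "idea_c": (1, 1)}
    print(rank_ideas(votes))
```

In this example the idea with 90 of 100 positive votes ranks above the one with 5 of 5, because the larger sample gives more confidence in its approval rate.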

Peer-to-Peer Validation Clusters

Peer-reviewed work relies on structured peer-to-peer validation clusters where experts critically assess research for accuracy and reliability within specific academic communities. Crowdsolving leverages diverse, large-scale collaborative inputs but lacks the targeted expert validation clusters that ensure the rigor and credibility inherent in peer-reviewed processes.

Citizen Science Crowdsourcing

Peer-reviewed work ensures rigorous evaluation by experts, enhancing the credibility and reliability of scientific collaboration, whereas crowdsolving in citizen science leverages diverse, large-scale public participation to accelerate data collection and problem-solving. Combining both approaches optimizes collaboration by integrating validated research methods with the expansive, real-time contributions from global crowdsourcing platforms.

AI-assisted Peer Arbitration

AI-assisted peer arbitration enhances collaboration by combining the rigor of peer-reviewed work with the diverse input of crowdsolving, ensuring quality control and faster consensus-building. This approach leverages advanced algorithms to evaluate contributions, resolve conflicts, and prioritize solutions based on expert validation and community insights.
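The text does not specify which algorithms such a system uses, so the following is only a hypothetical, rule-based stand-in (no machine learning involved): it blends an expert review score with a community score using a fixed weight and flags submissions where the two disagree sharply, so that a human arbiter can resolve the conflict. The `Submission` type, the 0-to-1 score scale, `expert_weight`, and `conflict_threshold` are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    """Hypothetical record: both scores are assumed to lie on a 0-1 scale."""
    name: str
    expert_score: float  # e.g. an averaged peer-review rating
    crowd_score: float   # e.g. a normalized community vote share


def arbitrate(submissions, expert_weight=0.7, conflict_threshold=0.3):
    """Rank submissions by a weighted blend and flag expert/crowd conflicts."""
    ranked = []
    flagged = []
    for s in submissions:
        combined = expert_weight * s.expert_score + (1 - expert_weight) * s.crowd_score
        ranked.append((s.name, combined))
        # A large gap between experts and the crowd is routed to a human arbiter.
        if abs(s.expert_score - s.crowd_score) > conflict_threshold:
            flagged.append(s.name)
    ranked.sort(key=lambda item: item[1], reverse=True)
    return ranked, flagged


if __name__ == "__main__":
    subs = [
        Submission("A", expert_score=0.9, crowd_score=0.8),
        Submission("B", expert_score=0.4, crowd_score=0.95),
        Submission("C", expert_score=0.7, crowd_score=0.6),
    ]
    ranking, needs_review = arbitrate(subs)
    print(ranking, needs_review)
```

Raising `expert_weight` moves the ranking toward peer-review judgments; lowering `conflict_threshold` sends more cases to human arbitration.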

Collective Wisdom Engines

Peer-reviewed work ensures rigorous validation through expert evaluation, fostering high-quality collaboration by leveraging specialized knowledge and structured feedback. Crowdsolving harnesses diverse perspectives and collective intelligence in real time; Collective Wisdom Engines aggregate this broad user input efficiently, enhancing innovation and scalability.
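A simple illustration of aggregating broad input is the classic wisdom-of-crowds estimate: collect many independent numeric guesses of one quantity and combine them. The sketch below assumes numeric estimates (the data are invented for illustration) and compares the mean with the median, which is far less sensitive to a single extreme contribution.

```python
from statistics import mean, median


def aggregate_crowd_estimates(estimates):
    """Combine independent numeric estimates of one quantity.

    Returns both the mean and the median so they can be compared.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    return {"mean": mean(estimates), "median": median(estimates)}


if __name__ == "__main__":
    # Invented example: five close guesses plus one extreme outlier.
    guesses = [98, 102, 100, 97, 103, 1000]
    print(aggregate_crowd_estimates(guesses))
```

With the single outlying guess of 1000, the mean jumps to 250, while the median stays at 101, close to the cluster of other estimates.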

Micro-grant Challenge Labs

Peer-reviewed work ensures rigorous evaluation and credibility through expert scrutiny, fostering high-quality research outputs in Micro-grant Challenge Labs. Crowdsolving leverages diverse perspectives and rapid idea generation, accelerating collaborative innovation and problem-solving within these labs.

Peer-reviewed Work vs Crowdsolving for Collaboration: Infographic

hrdif.com
