Panel interviews provide a structured environment where multiple interviewers collaboratively assess a candidate's skills, ensuring diverse perspectives and consistent evaluation criteria. Crowd-sourced interviews leverage feedback from a broader audience, offering varied insights but potentially lacking the focused expertise of a specialized panel. Both methods aim to enhance candidate evaluation accuracy, with panel interviews excelling in depth and crowd-sourced approaches delivering breadth.

Table of Comparison

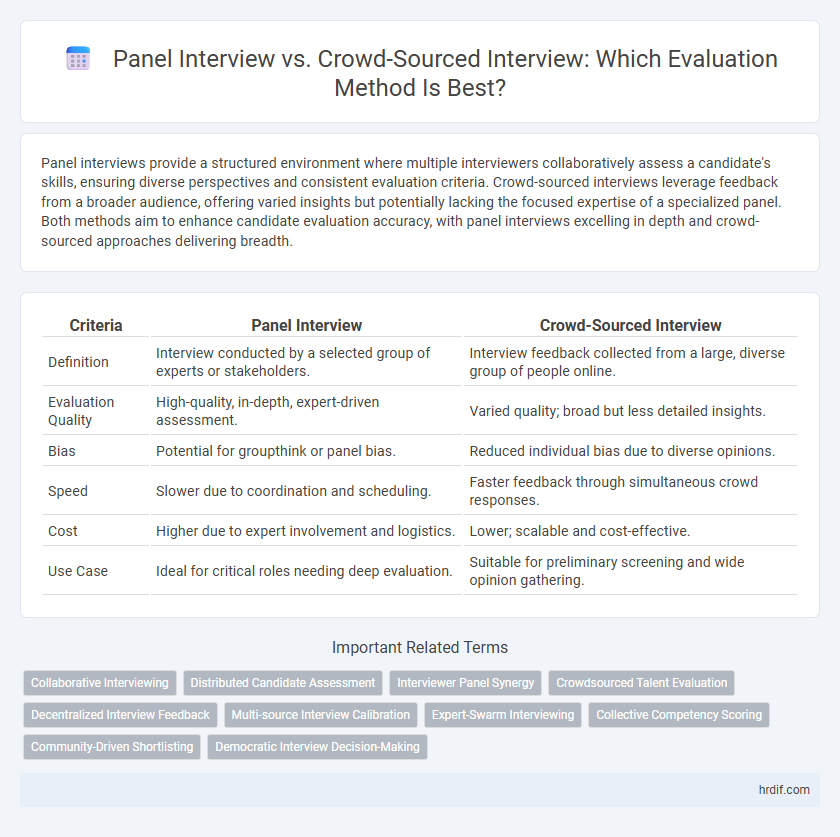

| Criteria | Panel Interview | Crowd-Sourced Interview |
|---|---|---|
| Definition | Interview conducted by a selected group of experts or stakeholders. | Interview feedback collected from a large, diverse group of people online. |
| Evaluation Quality | High-quality, in-depth, expert-driven assessment. | Varied quality; broad but less detailed insights. |
| Bias | Potential for groupthink or panel bias. | Reduced individual bias due to diverse opinions. |
| Speed | Slower due to coordination and scheduling. | Faster feedback through simultaneous crowd responses. |
| Cost | Higher due to expert involvement and logistics. | Lower; scalable and cost-effective. |
| Use Case | Ideal for critical roles needing deep evaluation. | Suitable for preliminary screening and wide opinion gathering. |

Understanding Panel Interviews: Structure and Purpose

Panel interviews use a structured format in which multiple interviewers jointly assess a candidate's skills, experience, and cultural fit in real time, giving a comprehensive evaluation from several perspectives. Each panel member typically focuses on specific competencies, which allows targeted questioning and quick consensus-building. This method can improve decision accuracy by drawing on the panel's collective expertise and by allowing live exchanges between interviewers and the candidate.

What Is a Crowd-Sourced Interview?

A crowd-sourced interview leverages input from multiple evaluators who independently assess candidates, providing diverse perspectives and reducing individual biases. Unlike traditional panel interviews where a fixed group interacts directly, crowd-sourced methods gather feedback asynchronously, often via digital platforms, enhancing scalability and objectivity. This approach harnesses collective intelligence to improve decision-making accuracy in candidate evaluation.

Key Differences Between Panel and Crowd-Sourced Interviews

Panel interviews involve a fixed group of experts assessing candidates simultaneously, ensuring consistent evaluation criteria and direct interaction to gauge responses effectively. Crowd-sourced interviews leverage a diverse pool of evaluators from various backgrounds, increasing the breadth of perspectives but potentially reducing consistency in assessment standards. The primary differences lie in the controlled environment and depth of feedback seen in panel interviews versus the scalability and diversity of insights found in crowd-sourced evaluations.

Advantages of Panel Interviews in Candidate Evaluation

Panel interviews strengthen candidate evaluation by bringing together several expert perspectives, limiting the influence of any single interviewer's bias, and supporting a comprehensive assessment of skills and cultural fit. The structured format lets panelists discuss the candidate in real time, so each competency is examined critically. For roles that demand deep expertise, this approach can make hiring decisions more reliable and valid than crowd-sourced interviews.

Benefits of Crowd-Sourced Interviews for Hiring Decisions

Crowd-sourced interviews leverage diverse perspectives from multiple evaluators, enhancing the objectivity and accuracy of candidate assessments. This approach reduces individual biases common in panel interviews by aggregating feedback from various stakeholders, resulting in more balanced hiring decisions. Moreover, crowd-sourcing accelerates the evaluation process through parallel candidate reviews, improving overall recruitment efficiency.

Challenges and Limitations of Panel Interviews

Panel interviews often face challenges such as potential bias from dominant panel members, limited perspectives restricted to the panel's expertise, and logistical difficulties in coordinating schedules. The controlled environment might inhibit candidate openness, leading to less authentic responses. Limited diversity in panel composition can result in narrow evaluation criteria, reducing the overall accuracy of candidate assessment.

Potential Drawbacks of Crowd-Sourced Interview Approaches

Crowd-sourced interview approaches may lead to inconsistent evaluation criteria due to the varied perspectives and expertise levels of participants, which can compromise the reliability of candidate assessments. The lack of standardized training for crowd evaluators often results in biases and subjective judgments, undermining the validity of the interview outcomes. Furthermore, managing and analyzing large volumes of feedback can be time-consuming and may dilute the focus on key candidate competencies.

Impact on Candidate Experience: Panel vs. Crowd-Sourced

Panel interviews offer candidates a structured environment with real-time interaction with multiple experts, and consistent questioning can make the process clearer and less stressful. Crowd-sourced interviews draw on diverse perspectives but may overwhelm candidates with varied question styles and fragmented evaluation. Because panel interviews are more personal and direct, they generally give candidates a more positive and coherent experience than the dispersed nature of crowd-sourced evaluations.

Which Evaluation Method Suits Your Organization?

Panel interviews provide structured insights from experienced evaluators, ensuring consistency and depth in candidate assessment. Crowd-sourced interviews draw on diverse perspectives and broader feedback, improving objectivity and surfacing a wider range of skills. Organizations hiring for specialized roles usually benefit from panel interviews, while those seeking wide-ranging input on cultural fit and innovation may be better served by crowd-sourced evaluations.

Panel Interview or Crowd-Sourced: Choosing the Right Fit

Panel interviews offer structured evaluation by a select group of experts, ensuring consistent assessment criteria and in-depth candidate analysis. Crowd-sourced interviews gather diverse perspectives from a broader audience, increasing impartiality but potentially reducing focus on specialized skills. Choosing between panel and crowd-sourced formats depends on the job complexity, required expertise, and desired evaluation objectivity.

Related Important Terms

Collaborative Interviewing

Panel interviews leverage a curated group of experts to collaboratively evaluate candidates, enabling diverse perspectives and structured questioning that enhance decision-making accuracy. Crowd-sourced interviews aggregate feedback from a broader, often remote, pool of evaluators, offering scalability and varied insights but potentially sacrificing consistency and depth in collaborative assessment.

Distributed Candidate Assessment

Panel interviews concentrate evaluation through a select group of experts, ensuring focused and consistent feedback on candidates. Crowd-sourced interviews leverage diverse input from multiple evaluators, distributing candidate assessment to capture varied perspectives and reduce individual bias.

Interviewer Panel Synergy

Panel interviews create interviewer synergy by allowing diverse experts to collaboratively evaluate candidates, enhancing the accuracy of assessments through real-time discussion and consensus-building. Crowd-sourced interviews leverage a wider range of perspectives asynchronously but may lack the immediate dynamic interaction that strengthens judgment alignment in panel settings.

Crowdsourced Talent Evaluation

Crowdsourced talent evaluation uses a diverse pool of evaluators to assess candidates, bringing broader perspectives and reducing individual biases compared to traditional panel interviews. The method scales easily and produces richer data, which can improve the accuracy and fairness of candidate assessments.

Decentralized Interview Feedback

In panel interviews, feedback comes from a fixed group of experts, which keeps evaluation structured and consistent and makes each panelist accountable for the depth of their insights. Crowd-sourced interviews decentralize feedback across multiple stakeholders, widening the breadth of evaluation and reducing individual bias through collective input.

Multi-source Interview Calibration

Panel interviews offer a structured approach to candidate evaluation by consolidating insights from multiple experts, enhancing consistency through real-time calibration of assessments. Crowd-sourced interviews leverage diverse perspectives from a broader evaluator pool, increasing the reliability of multi-source feedback while requiring robust mechanisms to align scoring criteria and reduce bias.
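One common way to align scoring across a large evaluator pool, assuming every evaluator rates several candidates on the same numeric scale, is to standardize each evaluator's scores (convert them to z-scores) before averaging, so that a lenient rater and a strict rater contribute on equal terms. The sketch below is illustrative only: the function name `calibrate_scores` and the nested-dictionary data layout are hypothetical and are not a method described in this article.

```python
from statistics import mean, pstdev


def calibrate_scores(ratings):
    """Hypothetical calibration helper: z-score each evaluator, then average.

    ratings: dict mapping evaluator name -> dict mapping candidate -> raw score.
    Returns a dict mapping candidate -> mean of that candidate's z-scores.
    """
    per_candidate = {}
    for evaluator, scores in ratings.items():
        values = list(scores.values())
        mu = mean(values)       # this evaluator's average score (leniency)
        sigma = pstdev(values)  # this evaluator's spread of scores
        for candidate, raw in scores.items():
            # An evaluator who gives everyone the same score carries no
            # ranking signal, so their contribution is set to zero.
            z = 0.0 if sigma == 0 else (raw - mu) / sigma
            per_candidate.setdefault(candidate, []).append(z)
    return {candidate: mean(zs) for candidate, zs in per_candidate.items()}


# Illustrative data: one lenient and one strict evaluator rank A > B > C.
ratings = {
    "lenient": {"A": 5, "B": 4, "C": 3},
    "strict": {"A": 3, "B": 2, "C": 1},
}
result = calibrate_scores(ratings)
for candidate, score in sorted(result.items()):
    print(candidate, round(score, 3))
```

In this example the two evaluators' raw scores differ by two points for every candidate, yet after standardization they produce identical profiles, so the aggregate reflects their shared ranking rather than their different levels of leniency. Real calibration schemes may also weight evaluators or use trimmed means; this sketch shows only the basic idea.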

Expert-Swarm Interviewing

Expert-Swarm Interviewing leverages a diverse, crowd-sourced panel of specialists to evaluate candidates, enhancing decision accuracy through aggregated expertise rather than relying on a limited, traditional panel. This method boosts assessment reliability by integrating multiple expert perspectives, reducing individual biases and enabling a more comprehensive candidate evaluation.

Collective Competency Scoring

Panel interviews rely on experts to provide nuanced collective competency scores, enabling a comprehensive assessment through direct interaction and real-time feedback. Crowd-sourced interviews aggregate insights from many evaluators, increasing scoring reliability and reducing bias by drawing on a broader range of perspectives.

Community-Driven Shortlisting

Community-driven shortlisting leverages diverse insights from a broad panel, enhancing the evaluation accuracy compared to traditional panel interviews where a limited group might overlook nuanced candidate qualities. Crowd-sourced interviews tap into collective intelligence and reduce bias, providing a more holistic assessment of candidates through scalable and democratic feedback mechanisms.

Democratic Interview Decision-Making

Panel interviews enhance democratic decision-making by involving multiple evaluators who provide diverse perspectives and reduce individual bias, leading to a more balanced assessment. Crowd-sourced interviews expand this model by leveraging a broader pool of contributors, increasing input diversity and democratizing evaluation decisions through collective intelligence.

hrdif.com