Game developers specialize in creating engaging gameplay mechanics, storylines, and character development, primarily for traditional platforms, while XR developers focus on immersive technologies like virtual reality (VR) and augmented reality (AR) to craft interactive, spatial experiences. XR development requires expertise in 3D modeling, spatial audio, and real-time rendering to deepen immersion beyond conventional screen-based interaction. Both roles demand strong programming skills, but XR developers also work closely with hardware integration and sensor data (such as head, hand, and eye tracking) to design interactions that respond to the user's body and physical surroundings.

Table of Comparison

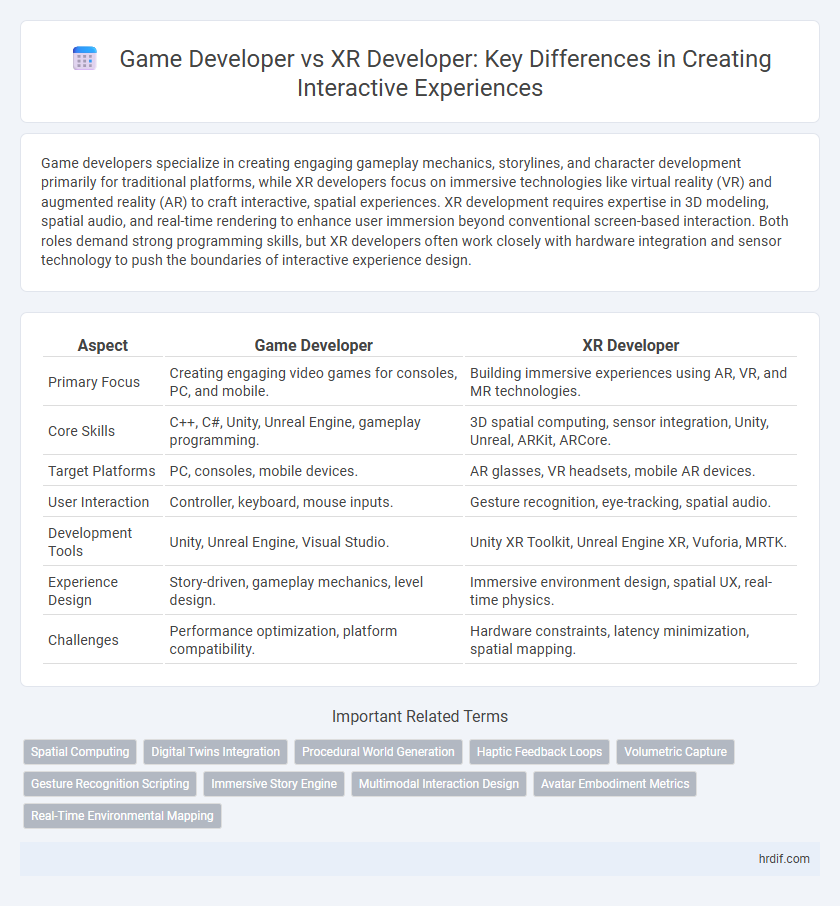

| Aspect | Game Developer | XR Developer |
|---|---|---|
| Primary Focus | Creating engaging video games for consoles, PC, and mobile. | Building immersive experiences using AR, VR, and MR technologies. |
| Core Skills | C++, C#, Unity, Unreal Engine, gameplay programming. | 3D spatial computing, sensor integration, Unity, Unreal Engine, ARKit, ARCore. |
| Target Platforms | PC, consoles, mobile devices. | AR glasses, VR headsets, mobile AR devices. |
| User Interaction | Controller, keyboard, and mouse input. | Motion controllers, gesture recognition, eye tracking, spatial audio cues. |
| Development Tools | Unity, Unreal Engine, Visual Studio. | Unity XR Interaction Toolkit, Unreal Engine OpenXR plugin, Vuforia, MRTK. |
| Experience Design | Story-driven gameplay mechanics, level design. | Immersive environment design, spatial UX, real-time physics. |
| Challenges | Performance optimization, platform compatibility. | Hardware constraints, latency minimization, spatial mapping. |

Introduction: Evolving Roles in Interactive Experience Development

Game developers specialize in creating immersive narratives and mechanics tailored for traditional screens, emphasizing user engagement through storytelling and gameplay design. XR developers focus on blending real and virtual environments using AR, VR, and MR technologies, building interactive experiences around spatial computing and sensory input. Both roles require proficiency in programming languages such as C# (used by Unity) and C++ (used by Unreal Engine), along with expertise in those engines, to deliver cutting-edge interactive content.

Defining the Game Developer: Core Skills and Responsibilities

Game developers design, code, and test interactive digital games, combining programming skill in languages like C++ and C# and engines like Unity with a strong grasp of game mechanics and user experience design. Their core responsibilities include creating engaging gameplay, optimizing performance, and collaborating with artists and designers to build immersive virtual environments. Mastery of physics engines, AI scripting, and cross-platform development rounds out the skill set needed to deliver smooth interactive experiences.

Who is an XR Developer? Scope and Expertise

An XR Developer specializes in creating immersive extended reality experiences that integrate virtual reality (VR), augmented reality (AR), and mixed reality (MR) technologies. Their expertise spans 3D modeling, spatial computing, real-time rendering, and sensor integration to build interactive environments that blend digital content with the physical world. Unlike traditional game developers who focus primarily on gameplay mechanics and narrative design, XR developers must ensure seamless hardware-software interaction and user experience across diverse XR platforms.

Key Overlaps: Shared Tools and Technologies

Game developers and XR developers both extensively use Unity and Unreal Engine for creating interactive 3D environments, leveraging powerful physics engines and real-time rendering capabilities. Both fields incorporate C# and C++ programming languages for scripting game mechanics and interactive elements, while also utilizing 3D modeling software like Blender and Maya to design assets. Shared technologies such as spatial audio, motion tracking, and gesture recognition APIs enhance immersive experiences across game and XR development.

Distinct Approaches to User Interaction and Engagement

Game developers prioritize immersive storytelling and responsive gameplay mechanics, using established engines like Unity and Unreal Engine. XR developers focus on spatial computing and real-world interaction through augmented and virtual reality platforms, deepening immersion with gesture recognition and environmental mapping. Both disciplines emphasize user-centric design but diverge in how they apply technology: game developers optimize interaction within screen-based virtual worlds, while XR developers optimize it within spatial environments that can include the user's physical surroundings.

Technical Stack Comparison: Game vs XR Development

Game developers primarily use engines like Unity, Unreal Engine, and programming languages such as C# and C++ to create immersive 2D and 3D interactive experiences optimized for consoles, PCs, and mobile devices. XR developers leverage similar platforms but integrate specialized SDKs and APIs for augmented reality (AR), virtual reality (VR), and mixed reality (MR), such as ARKit, ARCore, OpenXR, and custom hardware interfaces, emphasizing real-world spatial mapping and sensor input processing. The technical stack for XR development requires deeper expertise in real-time environment tracking, spatial audio, and gesture recognition, differentiating it from the traditional game development pipeline focused on rendering and gameplay mechanics.

Career Pathways: Qualifications and Learning Resources

Game developers typically need proficiency in programming languages such as C++ and C# and in engines like Unity, supported by a background in computer science or software engineering; resources such as Unity Learn, Unreal Engine tutorials, and specialized game development bootcamps help build these skills. XR developers need expertise in augmented reality (AR) and virtual reality (VR) platforms, familiarity with 3D modeling software, and knowledge of spatial computing; platforms like Microsoft Mesh, ARKit, and Oculus SDKs, along with courses from Coursera or Udacity, provide targeted learning. Both career paths benefit from strong problem-solving skills and portfolios of interactive projects, and continuous learning through online communities and industry events is crucial for staying current.

Market Demand and Job Opportunities

The market demand for XR developers is rapidly increasing due to the expansion of augmented and virtual reality applications in gaming, training, and simulation sectors, creating a surge in specialized job opportunities. Game developers continue to have strong job prospects driven by the thriving global gaming industry, which generates billions in revenue annually and requires continuous innovation in game mechanics and storytelling. Companies increasingly seek candidates with XR development skills to deliver immersive interactive experiences, making XR expertise a valuable complement to traditional game development roles.

Salary Trends and Career Growth Potential

Game developers typically earn an average salary ranging from $70,000 to $110,000 annually, driven by the established gaming industry with consistent demand and numerous career advancement opportunities. XR developers command higher salaries, often between $90,000 and $130,000, fueled by growing investment in augmented and virtual reality technologies, creating strong career growth potential in emerging sectors. Both roles benefit from technical expertise and creativity, but XR development offers accelerated career growth due to its integration in diverse fields such as healthcare, education, and enterprise solutions.

Choosing Your Path: Game Development or XR Development?

Game developers specialize in creating immersive gameplay experiences using engines like Unity or Unreal, focusing on mechanics, storytelling, and user engagement across platforms such as PC, consoles, and mobile. XR developers concentrate on augmented reality (AR), virtual reality (VR), and mixed reality (MR) applications, integrating spatial computing, sensor data, and real-world environments to build interactive experiences for devices like Meta Quest (formerly Oculus Quest), HoloLens, and Magic Leap. Choosing between game development and XR development depends on whether you are drawn more to traditional gameplay design or to pioneering spatial interactions and immersive technologies.

Related Important Terms

Spatial Computing

Game developers excel at crafting immersive narratives and dynamic gameplay in traditional 3D engines, while XR developers specialize in spatial computing technologies that enable interactive experiences in augmented, virtual, and mixed reality environments. Using sensors, spatial mapping, and real-time interaction with the environment, XR developers build applications that merge digital content with the physical world, a core requirement of next-generation spatial computing experiences.
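A basic spatial computing operation is placing virtual content on a detected real-world surface. AR frameworks such as ARKit and ARCore expose raycast or hit-test calls for this; the sketch below does not use either SDK and only illustrates the underlying ray-plane intersection in plain Python. The function name and the floor-plane example are hypothetical.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def hit_test_plane(origin: Vec3, direction: Vec3,
                   plane_point: Vec3, plane_normal: Vec3,
                   eps: float = 1e-6) -> Optional[Vec3]:
    """Return where a ray (e.g. from the device camera) meets a detected plane,
    or None if the ray is parallel to the plane or the plane is behind it."""
    denom = dot(direction, plane_normal)
    if abs(denom) < eps:
        return None  # ray runs parallel to the plane: no single hit point
    diff = (plane_point[0] - origin[0],
            plane_point[1] - origin[1],
            plane_point[2] - origin[2])
    t = dot(diff, plane_normal) / denom  # distance along the ray, in direction units
    if t < 0:
        return None  # intersection lies behind the ray origin
    return (origin[0] + t * direction[0],
            origin[1] + t * direction[1],
            origin[2] + t * direction[2])

# Hypothetical usage: camera 1.5 m above a floor plane (y = 0), looking down and forward.
anchor = hit_test_plane((0.0, 1.5, 0.0), (0.0, -1.0, -1.0), (0.0, 0.0, 0.0), (0.0, 1.0, 0.0))
```

The returned point is where an app would create an anchor and attach a virtual object, so the object stays fixed to the floor as the user moves.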

Digital Twins Integration

Game developers primarily focus on creating engaging interactive experiences using traditional 3D engines, while XR developers specialize in immersive environments that integrate digital twins for real-time simulations and spatial accuracy. Digital twins enhance XR projects by providing dynamic, data-driven models that enable precise monitoring, predictive maintenance, and realistic user interactions within virtual and augmented reality frameworks.
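On the monitoring side, a digital twin is a software model kept in sync with telemetry from its physical counterpart, which an XR view can then visualize. The sketch below assumes a hypothetical pump that reports temperature readings; the class, the 80 °C threshold, and the exponential-moving-average smoothing are illustrative choices, not a specific digital-twin platform's API.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class PumpTwin:
    """Hypothetical digital twin of a pump, kept in sync with live telemetry."""
    alert_temp_c: float = 80.0   # assumed maintenance threshold (illustrative)
    alpha: float = 0.3           # EMA smoothing factor: higher reacts faster to new readings
    smoothed_temp_c: Optional[float] = None
    alerts: List[str] = field(default_factory=list)

    def ingest(self, timestamp_s: float, temp_c: float) -> None:
        """Fold one sensor reading into the twin's state and record an alert if needed."""
        if self.smoothed_temp_c is None:
            self.smoothed_temp_c = temp_c  # first reading initializes the state
        else:
            self.smoothed_temp_c = (self.alpha * temp_c
                                    + (1 - self.alpha) * self.smoothed_temp_c)
        if self.smoothed_temp_c > self.alert_temp_c:
            self.alerts.append(
                f"t={timestamp_s:.0f}s: smoothed {self.smoothed_temp_c:.1f} C "
                f"exceeds {self.alert_temp_c:.1f} C")

# Hypothetical telemetry stream: the pump suddenly runs hot.
twin = PumpTwin()
for t, temp in enumerate([70.0, 70.0, 100.0, 100.0, 100.0]):
    twin.ingest(float(t), temp)
```

Smoothing keeps a single noisy spike from raising an alert; an XR client could, for example, highlight the pump's 3D model whenever `twin.alerts` is non-empty.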

Procedural World Generation

Game developers use procedural world generation to create vast, dynamic environments from algorithms, commonly seeded noise functions and rule sets, so that gameplay can differ from one session to the next, while XR developers integrate such worlds into extended reality platforms, enhancing immersion through real-time spatial mapping and interaction. Combining procedural techniques with XR technologies enables scalable content creation and responsive user engagement in interactive experiences.
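Seeded noise is one common way to generate terrain procedurally. The sketch below uses 1D value noise (random heights at lattice points, smoothly interpolated) summed over several octaves to produce a side-view terrain profile; Perlin or simplex noise are popular alternatives, and the same idea extends to 2D heightmaps. Function names and parameters are illustrative.

```python
import random
from typing import List

def value_noise_1d(length: int, lattice_spacing: int, seed: int) -> List[float]:
    """Return `length` heights in [0, 1] using seeded 1D value noise."""
    rng = random.Random(seed)  # private generator: the same seed rebuilds the same world
    # Random heights at evenly spaced lattice points, plus one extra for the last segment.
    lattice = [rng.random() for _ in range(length // lattice_spacing + 2)]
    heights = []
    for x in range(length):
        i, offset = divmod(x, lattice_spacing)
        t = offset / lattice_spacing
        t = t * t * (3 - 2 * t)  # smoothstep easing avoids sharp creases at lattice points
        heights.append(lattice[i] * (1 - t) + lattice[i + 1] * t)
    return heights

def fractal_terrain(length: int, seed: int, octaves: int = 3,
                    base_spacing: int = 32) -> List[float]:
    """Sum octaves of value noise (coarse hills plus finer bumps), normalized to [0, 1]."""
    total = [0.0] * length
    amplitude, norm = 1.0, 0.0
    for o in range(octaves):
        spacing = max(1, base_spacing >> o)                  # halve the feature size each octave
        layer = value_noise_1d(length, spacing, seed + o)    # distinct seed per octave
        total = [acc + amplitude * h for acc, h in zip(total, layer)]
        norm += amplitude
        amplitude *= 0.5                                     # finer octaves contribute less
    return [h / norm for h in total]

terrain = fractal_terrain(128, seed=42)
```

Because every random value comes from a generator seeded per world, storing only the seed is enough to regenerate an identical world later, which keeps save files and network sync small.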

Haptic Feedback Loops

Game developers often prioritize immersive storytelling and visual engagement, while XR developers focus on integrating haptic feedback loops to create tactile and responsive interactive experiences. Advanced haptic systems in XR development enhance user immersion by providing real-time physical sensations that synchronize with virtual events.
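A haptic feedback loop runs every frame: read the virtual events that just happened, then send a matching vibration to the controller. XR runtimes such as OpenXR accept a vibration amplitude normalized between 0.0 and 1.0 plus a duration; the sketch below only computes that pulse and does not call any runtime. The speed thresholds and the mapping curve are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class HapticPulse:
    amplitude: float   # normalized: 0.0 (off) to 1.0 (strongest)
    duration_s: float

def pulse_for_impact(impact_speed_mps: float,
                     min_speed: float = 0.2,
                     max_speed: float = 3.0) -> Optional[HapticPulse]:
    """Map a virtual collision's speed to a vibration pulse (assumed mapping)."""
    if impact_speed_mps < min_speed:
        return None  # ignore grazing contacts so the controller doesn't buzz constantly
    norm = min((impact_speed_mps - min_speed) / (max_speed - min_speed), 1.0)
    amplitude = 0.2 + 0.8 * norm   # keep a perceptible floor for light hits
    duration = 0.02 + 0.06 * norm  # harder hits last a little longer
    return HapticPulse(amplitude, duration)

def haptic_frame(impact_speeds: List[float]) -> Optional[HapticPulse]:
    """One loop iteration: choose the strongest pulse among this frame's impacts."""
    pulses = [p for p in (pulse_for_impact(s) for s in impact_speeds) if p is not None]
    return max(pulses, key=lambda p: p.amplitude, default=None)
```

Sending at most one pulse per frame keeps the feedback synchronized with what the user sees, instead of queuing a burst of overlapping vibrations.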

Volumetric Capture

Game developers create immersive interactive experiences using traditional 3D models and real-time rendering techniques, while XR developers specialize in integrating volumetric capture technology to deliver highly realistic, spatially accurate representations of real-world subjects for augmented and virtual reality environments. Volumetric capture enhances XR experiences by enabling users to interact with true-to-life holograms, bridging the gap between physical presence and digital immersion.

Gesture Recognition Scripting

Game developers often implement gesture recognition scripting using traditional input methods and game engines like Unity or Unreal Engine to create responsive character controls, while XR developers leverage advanced sensor data and spatial computing in platforms like Microsoft HoloLens and Magic Leap to enable natural, immersive interactions through precise hand and body tracking. Mastery of frameworks such as OpenXR and SDKs for Leap Motion or Azure Kinect is essential for XR developers to script seamless gesture-based experiences that enhance user engagement in mixed reality environments.
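Hand-tracking APIs such as OpenXR's hand-tracking extension report per-joint positions, including the thumb tip and index fingertip. A common scripted gesture is the pinch: the sketch below assumes those two positions (in meters) are already available each frame and uses two thresholds (hysteresis) so sensor jitter does not toggle the gesture on and off. The class name and threshold values are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

class PinchDetector:
    """Detects a pinch from thumb-tip and index-tip positions (meters) with hysteresis."""

    def __init__(self, start_dist_m: float = 0.02, release_dist_m: float = 0.04):
        # Assumed thresholds: begin pinching under 2 cm, release only above 4 cm,
        # so jitter around a single threshold can't flip the state every frame.
        self.start = start_dist_m
        self.release = release_dist_m
        self.pinching = False

    def update(self, thumb_tip: Vec3, index_tip: Vec3) -> bool:
        """Feed one frame of joint positions; return whether a pinch is active."""
        d = math.dist(thumb_tip, index_tip)
        if not self.pinching and d < self.start:
            self.pinching = True
        elif self.pinching and d > self.release:
            self.pinching = False
        return self.pinching

detector = PinchDetector()
```

Between 2 cm and 4 cm the detector keeps its previous state, which is what makes a grab feel stable while the user holds a virtual object.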

Immersive Story Engine

Game developers specialize in creating interactive experiences using traditional game engines that emphasize gameplay mechanics and narrative flow, while XR developers focus on building immersive environments with extended reality technologies such as AR, VR, and MR, often integrating spatial computing for enhanced realism. An immersive story engine can bridge these disciplines by enabling dynamic storytelling on XR platforms, combining spatial audio, real-time rendering, and user interaction models to craft engaging, multisensory narratives.
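As a hypothetical sketch of two building blocks such a story engine might combine: story beats that fire once when the listener walks within range, and a distance-based gain for the narration audio. The gain uses the inverse-distance-clamped model (the one OpenAL names `AL_INVERSE_DISTANCE_CLAMPED`); the beat scheduler, its names, and the default distances are assumptions.

```python
import math
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]

def inverse_distance_gain(distance: float, ref: float = 1.0,
                          rolloff: float = 1.0, max_dist: float = 20.0) -> float:
    """Inverse-distance-clamped attenuation: full gain inside `ref`, no further drop past `max_dist`."""
    d = min(max(distance, ref), max_dist)
    return ref / (ref + rolloff * (d - ref))

class StoryBeats:
    """Hypothetical beat scheduler: each beat fires once when the listener gets close."""

    def __init__(self, beats: Dict[str, Tuple[Vec3, float]]):
        self.beats = beats            # beat name -> (world position, trigger radius in meters)
        self.fired: List[str] = []

    def update(self, listener: Vec3) -> List[Tuple[str, float]]:
        """Return (beat name, narration gain) for beats that start this frame."""
        started = []
        for name, (pos, radius) in self.beats.items():
            d = math.dist(listener, pos)
            if name not in self.fired and d <= radius:
                self.fired.append(name)
                started.append((name, inverse_distance_gain(d)))
        return started

story = StoryBeats({"intro": ((0.0, 0.0, 0.0), 2.0)})
```

Tying narration to the listener's position, rather than to a timeline, lets the story unfold as the user physically explores the space.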

Multimodal Interaction Design

Game developers specialize in creating immersive digital environments for traditional input devices, focusing on gameplay mechanics and visual storytelling, while XR developers apply multimodal interaction design, combining voice, gesture, and spatial inputs to create seamless interactive experiences across augmented, virtual, and mixed reality platforms. Expertise in XR development demands an understanding of sensor technology, real-time 3D rendering, and user experience optimization to make interactions more natural and intuitive than conventional game controls.
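One simple way to combine modalities is late fusion by time window: each input is recognized separately, then a deictic voice command ("select that") is resolved against the pointing event closest in time, in the spirit of Richard Bolt's 1980 "Put-That-There" system. The sketch assumes speech and pointing have already been recognized upstream; the event types and the one-second window are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class PointEvent:
    time_s: float
    target_id: str   # object the user's hand ray or gaze was on

@dataclass
class VoiceEvent:
    time_s: float
    command: str     # intent already recognized by a speech engine, e.g. "select"

def fuse(voice: VoiceEvent, points: List[PointEvent],
         window_s: float = 1.0) -> Optional[str]:
    """Resolve a voice command to the pointed-at object nearest in time,
    if one occurred within `window_s` seconds of the utterance."""
    candidates = [p for p in points if abs(p.time_s - voice.time_s) <= window_s]
    if not candidates:
        return None  # no gesture close enough in time: ask the user to point again
    best = min(candidates, key=lambda p: abs(p.time_s - voice.time_s))
    return f"{voice.command}:{best.target_id}"

points = [PointEvent(0.0, "lamp"), PointEvent(2.1, "chair")]
```

The time window matters because people rarely point and speak at exactly the same instant; too narrow a window drops valid commands, too wide a window binds them to stale targets.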

Avatar Embodiment Metrics

Game developers typically optimize avatar embodiment metrics through traditional animation and physics-based interactions that deepen player immersion, while XR developers use sensor data and real-time body tracking to create highly responsive, lifelike avatars. XR development adds spatial computing and haptic feedback to strengthen the user's sense of presence and agency, which can yield more immersive interaction than conventional screen-based methods.
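One objective embodiment metric is how closely the rendered avatar follows the user's tracked body. The sketch below computes a mean per-joint position error (the same idea as MPJPE from pose estimation) between tracked joint positions and the avatar's joints; the joint names and poses are hypothetical, and subjective presence questionnaires are a separate, complementary measure.

```python
import math
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]

def mean_joint_error_cm(tracked: Dict[str, Vec3], avatar: Dict[str, Vec3]) -> float:
    """Mean Euclidean distance, in centimeters, between tracked and avatar joints.
    Inputs are in meters; only joints present in both poses are compared."""
    shared = tracked.keys() & avatar.keys()
    if not shared:
        raise ValueError("poses share no joints")
    total_m = sum(math.dist(tracked[j], avatar[j]) for j in shared)
    return 100.0 * total_m / len(shared)

# Hypothetical frame: the avatar's left hand lags the tracked hand by 2 cm.
tracked_pose = {"head": (0.0, 1.7, 0.0), "left_hand": (0.3, 1.2, 0.2), "right_hand": (-0.3, 1.2, 0.2)}
avatar_pose = {"head": (0.0, 1.7, 0.0), "left_hand": (0.3, 1.2, 0.22)}
error = mean_joint_error_cm(tracked_pose, avatar_pose)
```

Logging this value per frame shows where retargeting, smoothing, or inverse kinematics pull the avatar away from the user's real movements.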

Real-Time Environmental Mapping

Game developers use real-time environmental mapping within traditional 3D game engines to create immersive, dynamic worlds that respond to player interactions. XR developers apply spatial computing and sensor fusion for precise real-time mapping of the user's physical surroundings, enabling virtual content to integrate seamlessly with them in augmented and mixed reality experiences.
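Production XR runtimes use their own (often mesh-based) reconstruction, but the probabilistic core of mapping can be illustrated with a classic log-odds occupancy grid from robotics. In the sketch below, each depth ray adds evidence that its end cell is occupied and that cells it passed through are free. The class, cell size, and log-odds constants are assumptions, and sampling the ray at half-cell steps is a simplification of exact grid traversal (such as Bresenham or Amanatides-Woo).

```python
import math
from typing import Dict, Set, Tuple

Cell = Tuple[int, int]
Point = Tuple[float, float]

class OccupancyGrid2D:
    """Sparse floor-plane occupancy grid updated with log-odds evidence."""

    def __init__(self, cell_size_m: float = 0.1,
                 hit_log_odds: float = 0.85, miss_log_odds: float = -0.4):
        self.cell = cell_size_m
        self.l_hit = hit_log_odds    # assumed sensor model: evidence added for a depth hit
        self.l_miss = miss_log_odds  # assumed evidence for cells the ray passed through
        self.log_odds: Dict[Cell, float] = {}  # unseen cells are implicitly 0.0 (p = 0.5)

    def to_cell(self, p: Point) -> Cell:
        return (math.floor(p[0] / self.cell), math.floor(p[1] / self.cell))

    def integrate_ray(self, origin: Point, hit: Point) -> None:
        """Fold one depth measurement (sensor origin to surface hit) into the map."""
        hit_cell = self.to_cell(hit)
        steps = max(1, int(math.dist(origin, hit) / (self.cell * 0.5)))
        free: Set[Cell] = set()
        for k in range(steps):  # sample along the ray at roughly half-cell spacing
            t = k / steps
            c = self.to_cell((origin[0] + t * (hit[0] - origin[0]),
                              origin[1] + t * (hit[1] - origin[1])))
            if c != hit_cell:
                free.add(c)
        for c in free:  # each traversed cell gets one "free" update per ray
            self.log_odds[c] = self.log_odds.get(c, 0.0) + self.l_miss
        self.log_odds[hit_cell] = self.log_odds.get(hit_cell, 0.0) + self.l_hit

    def probability(self, p: Point) -> float:
        """Occupancy probability: the logistic function of the cell's log-odds."""
        l = self.log_odds.get(self.to_cell(p), 0.0)
        return 1.0 / (1.0 + math.exp(-l))

grid = OccupancyGrid2D()
for _ in range(3):  # three frames see a wall about 1.05 m ahead
    grid.integrate_ray((0.0, 0.0), (1.05, 0.0))
```

Accumulating evidence in log-odds makes each update a simple addition and lets repeated observations outweigh occasional noisy depth readings.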

Infographic: Game Developer vs XR Developer for Interactive Experiences

hrdif.com