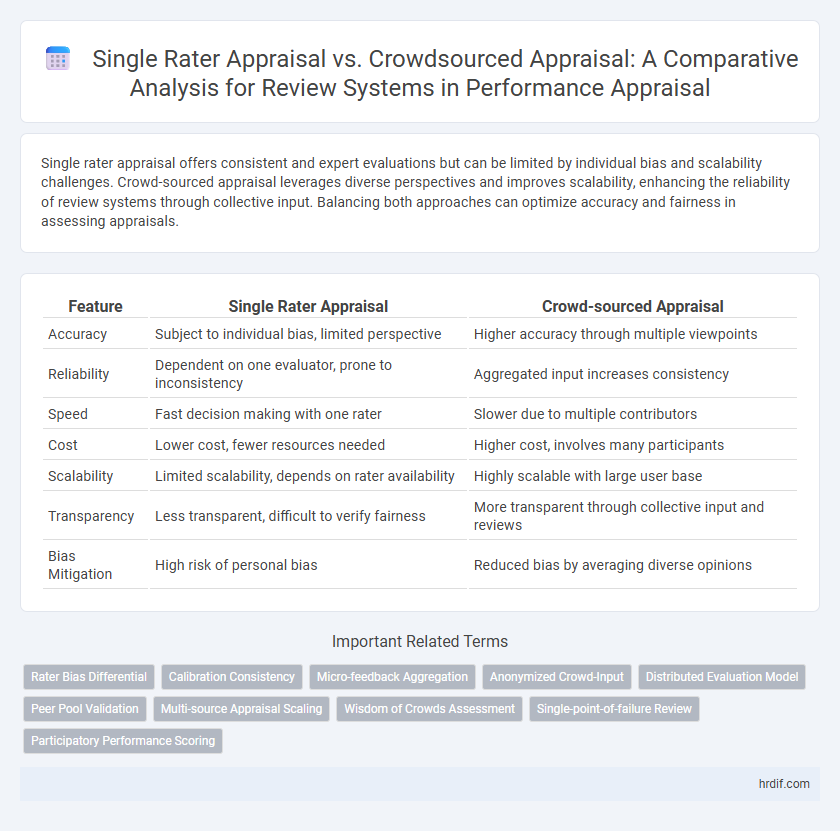

Single rater appraisal offers consistent, expert evaluation but is limited by individual bias and poor scalability. Crowd-sourced appraisal draws on diverse perspectives and scales better, and its collective input makes review systems more reliable. Combining the two approaches can improve both the accuracy and the fairness of appraisals.

Table of Comparison

| Feature | Single Rater Appraisal | Crowd-sourced Appraisal |
|---|---|---|
| Accuracy | Subject to individual bias, limited perspective | Higher accuracy through multiple viewpoints |
| Reliability | Dependent on one evaluator, prone to inconsistency | Aggregated input increases consistency |
| Speed | Fast decision making with one rater | Slower due to multiple contributors |
| Cost | Lower cost, fewer resources needed | Higher cost, involves many participants |
| Scalability | Limited scalability, depends on rater availability | Highly scalable with large user base |
| Transparency | Less transparent, difficult to verify fairness | More transparent through collective input and reviews |
| Bias Mitigation | High risk of personal bias | Reduced bias by averaging diverse opinions |

Understanding Single Rater and Crowd-sourced Appraisal Systems

Single rater appraisal relies on one individual to evaluate performance, ensuring consistent, in-depth insight but risking subjective bias and limited perspective. Crowd-sourced appraisal gathers input from multiple reviewers, enhancing diversity of opinions and reducing personal bias while increasing reliability and fairness. Both systems serve different needs: single rater appraisals are effective for specialized expertise, whereas crowd-sourced appraisals excel in broad-based evaluation and consensus building.

Methodology: How Each Appraisal Approach Works

Single rater appraisal relies on one reviewer assessing the subject against predefined criteria, which gives direct, consistent feedback from a single perspective. Crowd-sourced appraisal aggregates evaluations from multiple independent contributors, using diverse opinions and statistical consensus methods to improve accuracy and reduce bias. The two approaches derive final ratings differently: a single rater's score rests on expert judgment, while crowd-sourced systems typically combine scores through weighted averages or majority voting.
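The weighted-average and majority-voting methods mentioned above can be sketched in a few lines of Python. This is an illustrative sketch only: the function names, the weights, and the tie-handling rule (returning `None` when no rating wins outright) are assumptions, not part of any particular review system.

```python
from collections import Counter


def weighted_average(scores, weights):
    """Combine numeric ratings, giving each rater the stated weight."""
    if len(scores) != len(weights) or not scores:
        raise ValueError("scores and weights must be non-empty and the same length")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(s * w for s, w in zip(scores, weights)) / total_weight


def majority_vote(labels):
    """Return the most common categorical rating, or None on a tie for first place."""
    if not labels:
        raise ValueError("labels must be non-empty")
    ranked = Counter(labels).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # assumed policy: a tie yields no consensus
    return ranked[0][0]


# Hypothetical example: the third rater counts double.
final_score = weighted_average([4, 5, 3], [1, 1, 2])
final_label = majority_vote(["meets", "exceeds", "meets"])
```

Weighted averages suit numeric scales, where a trusted rater can simply count for more; majority voting suits categorical ratings, where averaging has no meaning.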

Accuracy and Objectivity in Performance Evaluations

Single rater appraisal provides consistent feedback, but individual bias can limit its accuracy and objectivity in performance evaluations. Crowd-sourced appraisal aggregates multiple perspectives, which reduces subjective bias and yields a more objective assessment. In organizational settings, drawing on diverse evaluators generally improves the reliability and fairness of review systems.

Bias Reduction: Single Rater vs Crowd-sourced Reviews

Single rater appraisals often introduce individual biases that undermine the objectivity of a review system, while crowd-sourced appraisals draw on diverse perspectives, reducing personal bias and improving reliability. Crowd-sourced reviews aggregate multiple independent assessments, diluting extreme opinions and providing a more balanced evaluation. Because the errors of independent raters tend to offset one another when combined, crowd-sourcing leads to fairer, more accurate appraisal outcomes.
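One way to see how aggregation dilutes extreme opinions is to compare a plain mean with two robust alternatives, the median and a trimmed mean. The sketch below is illustrative: the ratings are made up, and `trimmed_mean` with its `trim_fraction` parameter is a hypothetical helper rather than a standard library function.

```python
from statistics import mean, median


def trimmed_mean(scores, trim_fraction=0.2):
    """Average the scores after dropping the lowest and highest trim_fraction of them."""
    if not scores:
        raise ValueError("scores must be non-empty")
    if not 0 <= trim_fraction < 0.5:
        raise ValueError("trim_fraction must be in [0, 0.5)")
    ordered = sorted(scores)
    k = int(len(ordered) * trim_fraction)  # number of ratings dropped at each end
    return mean(ordered[k:len(ordered) - k])


ratings = [4, 4, 5, 4, 1]  # hypothetical 1-5 ratings; the last rater is an outlier
plain = mean(ratings)              # pulled down by the outlier
robust_median = median(ratings)    # ignores the outlier entirely
robust_trimmed = trimmed_mean(ratings)
```

With a single rater there is nothing to trim, so an extreme judgment becomes the final score; with several raters, a robust aggregate can discount it.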

Scalability and Resource Efficiency

Single rater appraisal scales poorly because it depends on one person's expertise and availability, which often creates bottlenecks in high-volume review systems. Crowd-sourced appraisal scales far better: it spreads the workload across many contributors who can work in parallel, which shortens overall turnaround even though each item needs several ratings. It also reduces reliance on specialized personnel and the associated training costs, though coordinating many participants carries costs of its own, and aggregated judgments help maintain accuracy.

Employee Perception and Trust in Review Outcomes

Single rater appraisals often result in biased evaluations due to limited perspectives, negatively impacting employee perception and trust in review outcomes. Crowd-sourced appraisals aggregate multiple viewpoints, enhancing fairness and transparency, which significantly boosts employees' confidence in the accuracy of performance assessments. Incorporating diverse feedback in review systems fosters a culture of trust and acceptance, crucial for effective talent management and employee engagement.

Feedback Quality: Depth vs Diversity

Single rater appraisal often provides in-depth, consistent feedback rooted in the evaluator's expert knowledge, enabling detailed insights specifically tailored to the subject matter. Crowd-sourced appraisal enhances feedback diversity by aggregating multiple perspectives, reducing individual bias and uncovering varied aspects of performance or product quality. Balancing depth and diversity in review systems is crucial for comprehensive evaluations, leveraging the strengths of both detailed, expert insights and broad, diverse input.

Implementation Challenges and Best Practices

Single rater appraisal systems face potential bias, limited perspective, and inconsistent evaluations, so clear criteria and thorough rater training are critical. Crowd-sourced appraisal mitigates individual bias by aggregating diverse inputs but requires robust quality control, including calibration sessions and weighted scoring algorithms. A common best practice is to combine the two methods: initial crowd-sourced scores are validated by an expert single rater, which improves the reliability and fairness of the review system.
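A hybrid workflow of this kind might look like the following sketch. It makes one assumption beyond the text: rather than sending every crowd score to the expert, it escalates only when the crowd disagrees strongly. The function name, the `disagreement_threshold` value, and the `expert_review` callback are all hypothetical.

```python
from statistics import mean, pstdev


def hybrid_score(crowd_scores, expert_review, disagreement_threshold=1.0):
    """Return (score, source), escalating to the expert when the crowd disagrees.

    expert_review is a callable that takes the crowd scores and returns a score.
    """
    if not crowd_scores:
        raise ValueError("crowd_scores must be non-empty")
    spread = pstdev(crowd_scores)  # population standard deviation of the ratings
    if spread > disagreement_threshold:
        return expert_review(crowd_scores), "expert"
    return mean(crowd_scores), "crowd"


# Hypothetical expert who settles contested cases at 3.
def expert(scores):
    return 3


agreed = hybrid_score([4, 4, 5, 4], expert)     # low spread: crowd mean is kept
contested = hybrid_score([1, 5, 1, 5], expert)  # high spread: expert decides
```

Escalating only contested items keeps expert time focused where the crowd signal is weakest; a stricter policy could route every score through the expert instead.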

Suitability for Various Job Roles and Industries

Single rater appraisal offers focused insight ideal for specialized roles requiring deep expertise, ensuring consistency and accountability in performance evaluation. Crowd-sourced appraisal enhances reliability for roles with diverse responsibilities or customer-facing positions by aggregating multiple perspectives to minimize bias. Industries with uniform tasks, such as manufacturing, benefit from single rater appraisals, while dynamic sectors like retail or hospitality leverage crowd-sourced feedback for a comprehensive assessment.

Future Trends in Appraisal and Review Systems

Future trends in appraisal systems indicate a shift toward hybrid models combining single rater appraisal's focused expertise with crowd-sourced appraisal's diverse insights, enhancing accuracy and fairness. Advances in AI and machine learning are enabling real-time data aggregation from multiple reviewers, improving bias detection and decision reliability. Integration of blockchain technology is expected to increase transparency and security in crowd-sourced appraisals, setting new standards for accountability in review systems.

Related Important Terms

Rater Bias Differential

Single rater appraisal often suffers from higher rater bias differential due to the subjective perceptions and inconsistencies of an individual evaluator, leading to less reliable outcomes. Crowd-sourced appraisal mitigates this bias by aggregating diverse perspectives, resulting in more balanced and objective review systems.

Calibration Consistency

Single rater appraisal often lacks calibration consistency due to individual biases and subjective judgment, while crowd-sourced appraisal leverages diverse perspectives, enhancing reliability and minimizing variance in review scores. Employing crowd-sourced evaluations increases the accuracy and fairness of appraisal systems by balancing out personal biases through aggregated feedback.

Micro-feedback Aggregation

Single rater appraisal often lacks diverse perspectives, leading to potential bias and less reliable evaluations, whereas crowd-sourced appraisal leverages micro-feedback aggregation from multiple users to enhance accuracy and comprehensiveness in review systems. Aggregating numerous micro-feedback inputs enables identification of consistent patterns and reduces individual reviewer noise, improving overall appraisal quality and decision-making.

Anonymized Crowd-Input

Anonymized crowd-input enhances review systems by mitigating individual bias and increasing the diversity of perspectives, leading to more balanced and accurate appraisals compared to single rater appraisal, which often suffers from subjectivity and limited viewpoints. Leveraging multiple anonymous contributors in crowd-sourced appraisals improves reliability and reduces social pressure effects, making the assessment process more transparent and trustworthy.

Distributed Evaluation Model

Single rater appraisal centralizes evaluation responsibility in one individual, which can introduce bias and narrows the range of assessment, whereas crowd-sourced appraisal uses multiple evaluators to improve reliability and offset individual subjectivity. A distributed evaluation model aggregates this diverse feedback, balancing expertise and consensus to produce more robust performance appraisals.

Peer Pool Validation

Single rater appraisal often faces limitations in accuracy and bias due to reliance on an individual's subjective judgment, whereas crowd-sourced appraisal leverages a diverse peer pool to enhance validation through collective insights and cross-verification. Peer pool validation in crowd-sourced systems strengthens reliability by aggregating multiple perspectives, reducing individual errors, and promoting consensus-driven evaluation outcomes.

Multi-source Appraisal Scaling

Single Rater Appraisal often suffers from subjective bias and limited perspective, whereas Crowd-sourced Appraisal enhances reliability through aggregated inputs from diverse evaluators, optimizing accuracy in Multi-source Appraisal Scaling frameworks. Leveraging data fusion techniques, crowd-sourced systems integrate multiple independent assessments to produce normalized, scalable ratings that better reflect consensus quality and performance metrics.
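As an illustration of producing normalized ratings from multiple independent assessments, the sketch below converts each rater's scores to z-scores before averaging per item, so that habitually harsh and habitually lenient raters become comparable. Per-rater z-scoring is one assumed normalization among several possible ones, and the function names and sample data are hypothetical.

```python
from collections import defaultdict
from statistics import mean, pstdev


def normalize_per_rater(ratings):
    """Convert (rater, item, score) triples to per-rater z-scores.

    Raters whose scores never vary carry no ranking signal and get z = 0.0.
    """
    by_rater = defaultdict(list)
    for rater, _item, score in ratings:
        by_rater[rater].append(score)
    stats = {r: (mean(s), pstdev(s)) for r, s in by_rater.items()}
    normalized = []
    for rater, item, score in ratings:
        mu, sigma = stats[rater]
        z = (score - mu) / sigma if sigma > 0 else 0.0
        normalized.append((rater, item, z))
    return normalized


def consensus_by_item(normalized):
    """Average the normalized scores each item received."""
    by_item = defaultdict(list)
    for _rater, item, z in normalized:
        by_item[item].append(z)
    return {item: mean(zs) for item, zs in by_item.items()}


# Hypothetical data: one harsh and one lenient rater who agree on the ranking.
raw = [("harsh", "A", 1), ("harsh", "B", 3), ("lenient", "A", 3), ("lenient", "B", 5)]
consensus = consensus_by_item(normalize_per_rater(raw))
```

On the raw scale the two raters appear to disagree about item A (1 versus 3); after normalization both place A below B by the same margin.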

Wisdom of Crowds Assessment

Single rater appraisal relies on individual judgment, which can introduce bias and limit the diversity of perspective, whereas crowd-sourced appraisal applies the wisdom of crowds: aggregating multiple independent evaluations improves accuracy and reduces error. When raters' errors are independent, collective assessments tend to predict true quality better and give more balanced insight than a single rater's judgment.
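The error-reduction effect can be illustrated with a small simulation. It rests on strong assumptions that should be stated plainly: every rater is unbiased and their errors are independent Gaussian noise. The true quality, noise level, and crowd size below are arbitrary illustrative values.

```python
import random
from statistics import mean


def mean_abs_error(n_raters, true_quality=3.5, noise_sd=1.0, trials=2000, seed=0):
    """Average absolute error of an n-rater mean over many simulated appraisals."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    errors = []
    for _ in range(trials):
        ratings = [rng.gauss(true_quality, noise_sd) for _ in range(n_raters)]
        errors.append(abs(mean(ratings) - true_quality))
    return mean(errors)


single_error = mean_abs_error(n_raters=1)
crowd_error = mean_abs_error(n_raters=25)
print(f"single rater: {single_error:.2f}, crowd of 25: {crowd_error:.2f}")
```

Under these assumptions the error of the average shrinks roughly with the square root of the number of raters. Averaging does not remove a bias that all raters share, so independence matters as much as crowd size.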

Single-point-of-failure Review

Single rater appraisal systems pose a single-point-of-failure risk due to dependence on one individual's subjective judgment, which can lead to biased or inconsistent reviews. Crowd-sourced appraisal mitigates this risk by aggregating multiple independent evaluations, enhancing reliability and reducing the impact of any single erroneous or biased rating.

Participatory Performance Scoring

Single rater appraisal offers focused, expert evaluation with consistent, in-depth feedback, while crowd-sourced appraisal supports participatory performance scoring by aggregating diverse perspectives and increasing reliability through collective judgment. Involving a broader set of stakeholders in this way reduces individual bias and improves the accuracy of review systems.

Single Rater Appraisal vs Crowd-sourced Appraisal for review systems Infographic

hrdif.com
